When it comes to the ins and outs of Google’s crawling strategy, URL parameters are a big deal—and not always in a good way!

These nifty little add-ons can help filter content and create dynamic URLs, but they also bring along some baggage.

For instance, without a proper strategy, you might end up creating a practically infinite URL space that could leave Google’s crawlers scratching their heads.

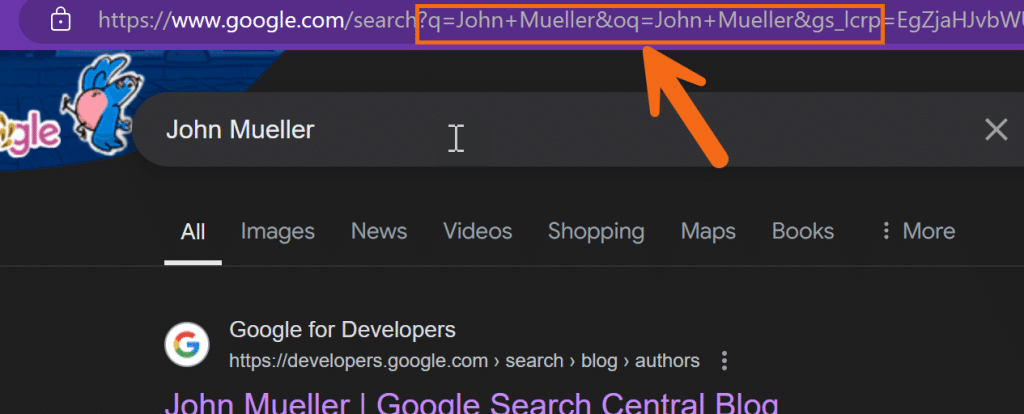

Google’s Gary Illyes and John Mueller discussed this topic in their new podcast on Google Webmaster’s YouTube channel.

So, let’s dive into how URL parameters can impact crawling efficiency and explore the potential hiccups Google faces while navigating through all that parameterized goodness. Buckle up; it’s going to be a fun ride through the URL jungle!

What are URL Parameters?

Alright, let’s break it down!

URL parameters are those clever bits of information added to the end of a link that help us pass data around on the web.

They usually come after a question mark `?` and are formatted as key-value pairs, like this: `?color=blue&size=large`. Think of them as little notes that say, “Hey, this is what I want!”

For example, if you’re shopping online and your link looks something like `www.example.com/shoes?color=red&size=10`, those parameters tell the site to show you red shoes in size 10.

Super handy, right?
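To make the key-value idea concrete, here is a minimal sketch that pulls the parameters out of the shoe link above using Python's standard `urllib.parse` module. The `https://` scheme is an assumption added so the URL parses cleanly; the original example omits it.

```python
from urllib.parse import urlsplit, parse_qsl

# The shoe example from above, with an assumed https:// scheme added.
url = "https://www.example.com/shoes?color=red&size=10"

parts = urlsplit(url)

# parse_qsl turns "color=red&size=10" into a list of (key, value) pairs.
params = dict(parse_qsl(parts.query))

print(parts.path)  # /shoes
print(params)      # {'color': 'red', 'size': '10'}
```

Everything after the `?` is just data handed to the site; the path `/shoes` stays the same no matter which filters are applied.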

But here comes the kicker: while they make our online experiences more tailored, they can also lead to a tangled web of duplicates if not handled with care. Imagine a site with a separate URL for every combination of color, size, and style of a shoe.

Suddenly, the crawlers are overwhelmed with choices instead of just hitting the nail on the head with one clean URL!

So, while URL parameters are like sprinkles on your web experience cupcake, too many can start to taste a little messy.
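A rough sketch shows how quickly those combinations pile up. The filter values below are hypothetical, picked only to illustrate the arithmetic for a single listing page.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical filter values for one /shoes listing page.
colors = ["red", "blue", "black"]
sizes = ["8", "9", "10", "11"]
styles = ["sneaker", "boot"]

# One URL per combination of color, size, and style.
urls = [
    "https://www.example.com/shoes?"
    + urlencode({"color": c, "size": s, "style": t})
    for c, s, t in product(colors, sizes, styles)
]

print(len(urls))  # 24
print(urls[0])    # https://www.example.com/shoes?color=red&size=8&style=sneaker
```

Three small filters already yield 24 URLs for one page. If the site also lets the same parameters appear in any order, three parameters have six possible orderings, so those 24 combinations could surface under as many as 144 distinct URLs.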

How Google handles URL parameters

When it comes to URL parameters, Google takes a thoughtful approach to ensure efficient crawling without unnecessary chaos. Here’s a breakdown of how Google handles these sneaky little modifiers:

- Understanding Parameters: Google understands that not all URL parameters are created equal. Some provide useful filtering, while others might lead to duplicate content. As John points out, “…we don’t know based off of the URL like we basically have to crawl first to know that something is different.”

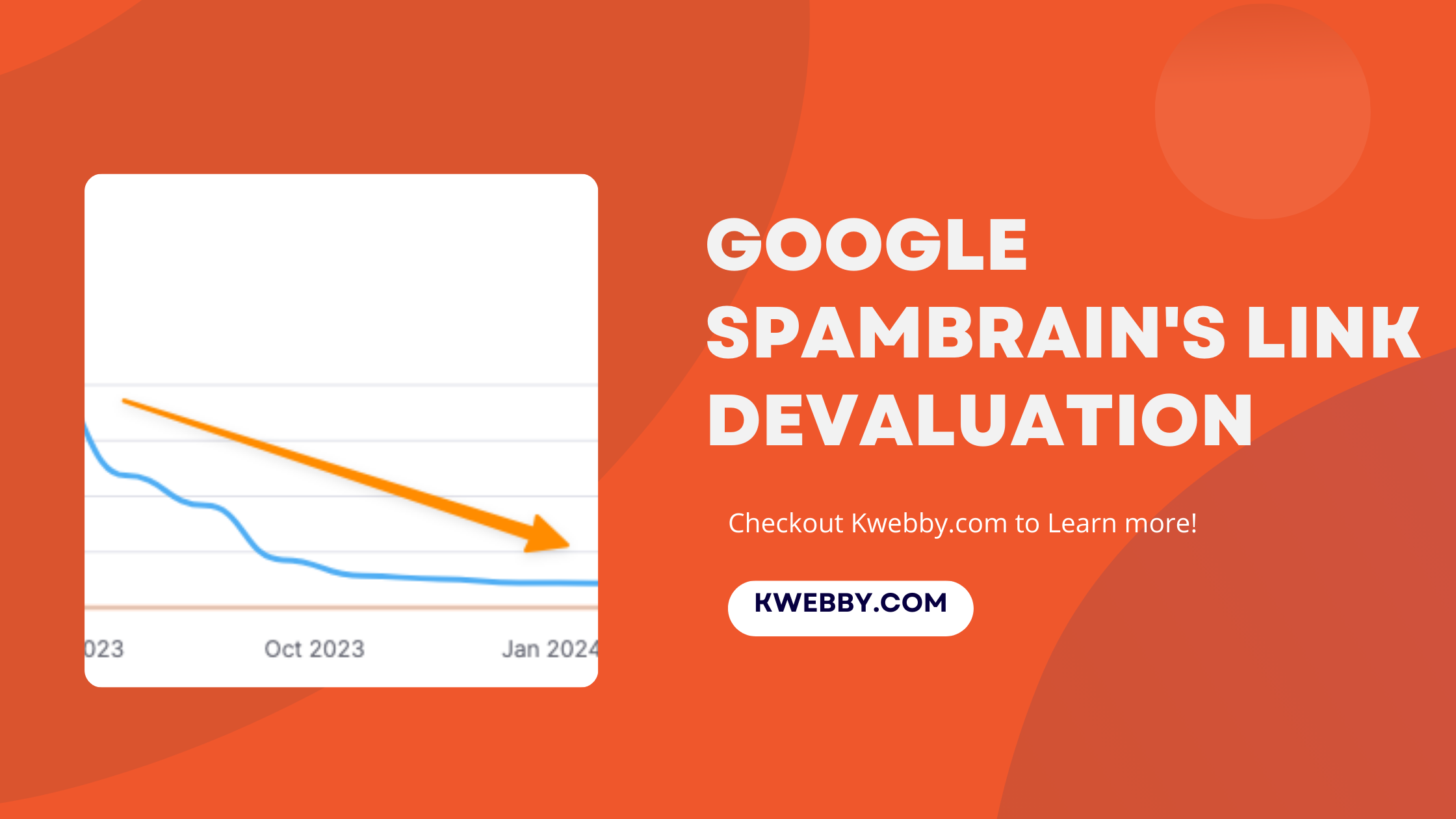

- Crawl Budget Considerations: If a site has an endless number of parameter combinations leading to duplicate content, crawling gets strained unnecessarily. Gary emphasizes, “If you have a thousand URLs instead of 10, that’s a big difference.” This means it’s crucial for site owners to manage parameters effectively to optimize crawl budgets.

By understanding URL parameters and how Google interacts with them, webmasters can create a more efficient crawling environment, benefiting both their site and its visibility in search results.
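John's point that a crawler has to fetch a page before it knows whether it is different can be illustrated with a toy example. This is only a sketch of the general idea, not a description of how Google actually detects duplicates: the `fetched_pages` dictionary is a made-up stand-in for real HTTP responses, and exact-match hashing is an assumption chosen for simplicity.

```python
import hashlib
from collections import defaultdict

# Stand-in for fetched responses (URL -> page body). In real life every
# entry costs one crawl request before any comparison is possible.
fetched_pages = {
    "https://www.example.com/shoes?color=red": "<h1>Red shoes</h1>",
    "https://www.example.com/shoes?color=red&sessionid=abc": "<h1>Red shoes</h1>",
    "https://www.example.com/shoes?color=red&ref=home": "<h1>Red shoes</h1>",
    "https://www.example.com/shoes?color=blue": "<h1>Blue shoes</h1>",
}


def group_by_content(pages):
    """Group URLs whose bodies are byte-for-byte identical."""
    groups = defaultdict(list)
    for url, body in pages.items():
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return list(groups.values())


duplicate_groups = group_by_content(fetched_pages)
for group in duplicate_groups:
    # Print how many URLs share this content, plus one representative URL.
    print(len(group), group[0])
```

Four URLs had to be fetched to learn that there are only two distinct pages. Nothing in the URLs themselves reveals that `sessionid` and `ref` leave the content unchanged while `color` does not.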

Common issues with URL parameters

When it comes to managing URL parameters, there are a few common hiccups that can arise, and understanding these can make all the difference in Google’s crawling efficiency. Here’s a rundown of some of the insights gleaned from the recent podcast with members of the Google Search team:

- Duplication Dilemma: URL parameters can lead to an explosion of duplicate content, making it difficult for Google to distinguish which versions to prioritize. As John aptly put it, “We don’t know based off of the URL; like we basically have to crawl first to know that something is different.” This makes it crucial to manage how parameters are utilized to prevent this duplication headache.

By being proactive about how URL parameters are structured and managed, site owners can enhance their site’s crawl efficiency and ultimately improve their search visibility.
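One way to be proactive is to collapse parameter variants into a single consistent form before they ever show up in links. The sketch below assumes a hypothetical `IGNORED_PARAMS` list of parameters that do not change what the page shows; which parameters belong on that list depends entirely on the site.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameters that do not change the page content.
IGNORED_PARAMS = {"sessionid", "ref", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url):
    """Drop ignorable parameters and sort the rest into a stable order."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS]
    kept.sort()
    # Rebuild the URL without a fragment and with the cleaned query string.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


variants = [
    "https://www.example.com/shoes?size=10&color=red",
    "https://www.example.com/shoes?color=red&size=10&sessionid=abc",
    "https://www.example.com/shoes?utm_source=news&color=red&size=10",
]

normalized = {normalize_url(u) for u in variants}
print(normalized)  # {'https://www.example.com/shoes?color=red&size=10'}
```

All three variants reduce to one URL, which gives the site a single version to link to internally instead of three that a crawler would need to fetch and compare.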

Best practices for site owners

To keep those pesky URL parameters from causing crawl chaos, here are some best practices that site owners can implement:

- Prioritize Clean URLs: Aim for simplicity! Try to create user-friendly URLs that don’t rely heavily on parameters. The cleaner the URL, the easier it is for Google (and users) to understand what the page is about.

- Utilize Robots.txt Wisely: Leverage your robots.txt file to tell Googlebot which parameterized URL patterns it should not crawl. This cuts down on the crawling of unnecessary URLs and keeps the focus on the important content. Remember, blocking at this level can save time and effort!

- Foster Quality Content: Ensure that your content is high-quality and updated regularly. Engaging, relevant content encourages Google to spend its crawling on pages that matter, rather than wandering down the rabbit hole of unimportant parameters.

- Implement Canonical Tags: Use canonical tags to indicate your preferred version of a page. This can help Google understand which URL to prioritize and combat duplicate content that arises from multiple parameter combinations.

- Monitor Crawl Stats in Search Console: Regularly check your crawl stats to glean insights into how Google interacts with your site. John highlights the importance of these stats, stating, “…look at the stats and it’s like, oh it takes on average like three seconds to get a page from your server.” Such data can guide you in improving your site’s performance.
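To get a feel for the robots.txt tip, it helps to test which parameterized URLs a set of rules would catch before deploying them. Google's robots.txt handling supports `*` as a wildcard and a trailing `$` as an end-of-URL anchor, and the sketch below mimics just that pattern syntax. It is a simplified illustration, not Google's parser: it ignores `Allow` rules, rule precedence, and user-agent groups, and the `DISALLOW_PATTERNS` entries are hypothetical.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with ".*" where '*' appeared.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


# Hypothetical Disallow rules aimed at parameters that only create duplicates.
DISALLOW_PATTERNS = ["/*?*sessionid=", "/*?*sort="]


def is_disallowed(path_and_query, patterns=DISALLOW_PATTERNS):
    """Return True if any pattern matches the start of the path and query."""
    return any(robots_pattern_to_regex(p).match(path_and_query) for p in patterns)


for candidate in [
    "/shoes",
    "/shoes?color=red",
    "/shoes?color=red&sessionid=abc",
    "/shoes?sort=price",
]:
    print(candidate, is_disallowed(candidate))
```

Running candidate URLs through a check like this makes over-broad patterns easier to spot. For example, `/*?*sort=` would also catch a parameter named `resort=`, which may or may not be what you want.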

By applying these best practices, site owners can streamline their URLs and ensure a smoother crawling experience for Google, all while enhancing their site’s visibility and efficiency!