Automated scraping can be a major drag on site performance, a problem that came up during Google's Search Central SEO office hours in August 2024.

A site under aggressive scraping can suffer increased server load and slower responses. When one participant asked about scraping that persisted even after common prevention methods such as IP blocking, Google answered with a set of mitigation steps.

Managing and reducing the impact of aggressive scraping takes both technical measures and external support.

Below are those steps, which cover finding the source of the unwanted traffic, using traceroute tools, and using a reliable Content Delivery Network (CDN) to make your site more resilient to malicious bot traffic.

Google Answers: How to stop targeted scraping

During the office hours, someone asked about an ongoing scraping problem:

“Our website is experiencing significant disruptions due to targeted scraping by automated software leading to performance issues, increased server load, and despite IP blocking and other preventive measures, the problem persists. What can we do?”

The answer offered a structured way to fight back against aggressive scraping. Here are the steps to reduce the impact:

- Find the Source of Traffic:

First, find the source of the traffic. As Google's John Mueller put it:

“This sounds like a distributed denial of service if the crawling is so aggressive that it causes performance degradation.”
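In practice, a common place to start looking for the source is your web server's access log. The minimal sketch below, which is not part of Google's answer, counts requests per client IP so the heaviest sources stand out. It assumes the Apache/Nginx common or combined log format, where the client IP is the first field; `top_client_ips` is a hypothetical helper name.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def top_client_ips(log_lines: Iterable[str], limit: int = 10) -> List[Tuple[str, int]]:
    """Count requests per client IP and return the busiest ones.

    Assumes common/combined log format: the client IP is the first
    whitespace-separated field on each line.
    """
    counts = Counter()
    for line in log_lines:
        fields = line.split(maxsplit=1)
        if fields:  # skip blank lines
            counts[fields[0]] += 1
    # most_common() sorts by count, highest first
    return counts.most_common(limit)
```

For example, `with open("/var/log/nginx/access.log") as f: print(top_client_ips(f))`; the log path is hypothetical and depends on your server. Behind a CDN or reverse proxy the first field may be the proxy's address, so you would need to log the forwarded client IP instead.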

Use a WHOIS lookup on the offending IP addresses to identify the network or hosting provider behind the suspicious traffic, then send that provider an abuse report.
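The command-line `whois` tool does this lookup for you. To script it, note that WHOIS (RFC 3912) is a plain-text query over TCP port 43. The sketch below asks IANA's WHOIS server, follows its `refer:` line to the regional registry, and collects e-mail addresses from lines that mention abuse. The helper names are hypothetical, and registries format their replies differently, so treat the extracted addresses as candidates to double-check.

```python
import re
import socket
from typing import List, Optional

# IANA's WHOIS server answers for any IP and names the regional
# registry (ARIN, RIPE, APNIC, ...) on a "refer:" line.
IANA_WHOIS = "whois.iana.org"


def whois_query(server: str, query: str, timeout: float = 10.0) -> str:
    """Send a raw WHOIS query over TCP port 43 and return the full reply."""
    with socket.create_connection((server, 43), timeout=timeout) as sock:
        sock.sendall(query.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # server closes the connection when done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def find_referral(text: str) -> Optional[str]:
    """Return the WHOIS server named on a 'refer:' line, if present."""
    match = re.search(r"^refer:\s*(\S+)", text, re.MULTILINE | re.IGNORECASE)
    return match.group(1) if match else None


def find_abuse_emails(text: str) -> List[str]:
    """Collect unique e-mail addresses from lines that mention 'abuse'."""
    emails: List[str] = []
    for line in text.splitlines():
        if "abuse" in line.lower():
            for email in re.findall(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", line):
                if email not in emails:
                    emails.append(email)
    return emails


def abuse_contacts_for_ip(ip: str) -> List[str]:
    """Ask IANA, follow one referral, then extract abuse contacts."""
    reply = whois_query(IANA_WHOIS, ip)
    registry = find_referral(reply)
    if registry:
        reply = whois_query(registry, ip)
    return find_abuse_emails(reply)
```

Calling `abuse_contacts_for_ip("203.0.113.5")` needs network access. Some registry replies point further (for example to an ISP's own WHOIS server); this sketch follows only one referral.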

- Use Traceroute Tools:

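If you can't find the network owner, use traceroute tools. Running traceroute from your server to an offending IP address lists the network hops along the path to that address; the last hops that respond usually belong to the provider hosting it, which tells you whom to contact.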
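The `traceroute` command (`tracert` on Windows) does the tracing itself. As a minimal sketch, assuming a Linux or macOS host with `traceroute` installed, the hypothetical helpers below run it with `-n` (numeric output, no DNS lookups) and pick out the last hop that answered, which you can then look up with WHOIS.

```python
import re
import subprocess
from typing import List, Optional, Tuple

# A hop line looks like " 4  203.0.113.1  12.3 ms  12.1 ms  12.0 ms"
HOP_LINE = re.compile(r"^\s*(\d+)\s+(.*)$")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def run_traceroute(target: str) -> str:
    """Run the system traceroute numerically and return its stdout."""
    result = subprocess.run(
        ["traceroute", "-n", target],
        capture_output=True, text=True, timeout=120, check=False,
    )
    return result.stdout


def parse_traceroute(output: str) -> List[Tuple[int, Optional[str]]]:
    """Turn traceroute -n output into (hop number, IPv4 or None) pairs.

    Hops that timed out ("* * *") are recorded as None; the header line
    ("traceroute to ...") does not start with a number and is skipped.
    """
    hops = []
    for line in output.splitlines():
        match = HOP_LINE.match(line)
        if not match:
            continue
        ip = IPV4.search(match.group(2))
        hops.append((int(match.group(1)), ip.group(0) if ip else None))
    return hops


def last_responding_hop(hops: List[Tuple[int, Optional[str]]]) -> Optional[str]:
    """Return the IP of the last hop that answered, if any did."""
    for _, ip in reversed(hops):
        if ip is not None:
            return ip
    return None
```

For example, `last_responding_hop(parse_traceroute(run_traceroute("203.0.113.50")))`. Many networks drop traceroute probes near the destination, so the last responding hop may be a transit provider rather than the host's own network.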

- Use a Content Delivery Network (CDN):

Another way to intervene is to put your site behind a CDN. As the answer pointed out:

“CDNs often have features to detect this kind of bot traffic and block it.”

A CDN not only reduces server load, since it serves traffic from its own network instead of your origin server, but also makes your site more resilient.

CDNs usually recognize and allow legitimate search engine bots, but you can contact your CDN's support team to confirm that they won't be blocked.
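If you also filter bots yourself, you can confirm that a visitor claiming to be Googlebot is genuine. Google documents a check along these lines: a reverse DNS lookup on the IP, a test that the hostname belongs to one of Google's crawler domains, then a forward DNS lookup that must return the same IP. The sketch below uses only Python's standard library; the domain list is an assumption based on Google's documentation, so check Google's current list, and the function names are hypothetical.

```python
import ipaddress
import socket
from typing import Iterable

# ASSUMPTION: crawler domains as listed in Google's "Verifying Googlebot"
# guide; confirm the current list there before relying on it.
GOOGLE_CRAWLER_DOMAINS = ("googlebot.com", "google.com")


def host_in_domains(hostname: str, domains: Iterable[str]) -> bool:
    """True if hostname is one of `domains` or a subdomain of one."""
    host = hostname.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def is_verified_google_crawler(ip: str) -> bool:
    """Reverse DNS, domain check, then forward DNS back to the same IP."""
    try:
        target = ipaddress.ip_address(ip)
        hostname, _, _ = socket.gethostbyaddr(ip)  # reverse (PTR) lookup
        if not host_in_domains(hostname, GOOGLE_CRAWLER_DOMAINS):
            return False
        infos = socket.getaddrinfo(hostname, None)  # forward lookup, IPv4 and IPv6
    except (OSError, ValueError):  # no DNS record, or not a valid IP string
        return False
    return any(ipaddress.ip_address(info[4][0]) == target for info in infos)
```

The suffix check compares whole labels (`.googlebot.com`), so a look-alike hostname such as `fakegooglebot.com` does not pass.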

By following these steps suggested by Google's John Mueller, you can reduce the impact of targeted scraping and keep your site stable and responsive.


How to Block Targeted Scraping on Your Site

#1 Block Known Bad Bot Crawlers Using robots.txt (List)

One way to discourage scraping is to use robots.txt to block known bad bot crawlers. This file tells compliant web crawlers which parts of your site they may access. It is a request, not an enforcement mechanism, so malicious scrapers can simply ignore it.

Bots that honor these rules will stop crawling the paths you disallow, which can reduce server load and the disruption caused by automated traffic.

Here’s how to do this step by step:

- Go to Your Site’s Root Directory:

Use an FTP client or your web host's file manager to open your site's root directory. That is where robots.txt belongs, because crawlers only look for it at /robots.txt.

- Create or Open robots.txt:

If you don’t have a robots.txt file, create one. If you already have one, open it.

- Add Bad Bot Blocks:

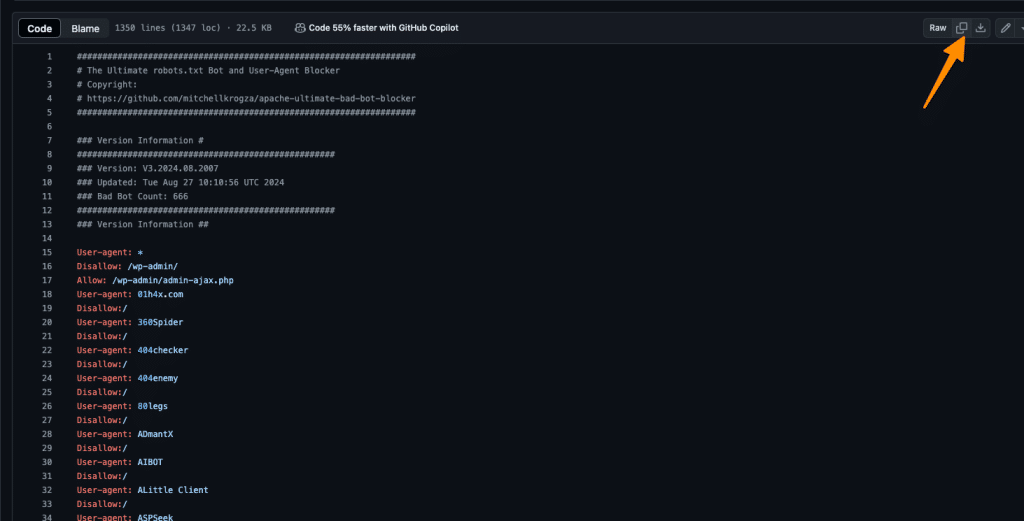

Go to the Apache Ultimate Bad Bot Blocker project, which maintains a list of user-agent strings for known bad bots. Copy the lines that contain these entries.

- Paste the Entries into robots.txt:

Paste the copied entries into your robots.txt. Each entry is a User-agent line followed directly by its Disallow rule, so your file should look like this:

```text
User-agent: BadBot
Disallow: /

User-agent: AnotherBadBot
Disallow: /
```

Replace “BadBot” and “AnotherBadBot” with the actual bot names you want to block.
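If you are blocking more than a handful of bots, generating the groups is less error-prone than pasting them by hand. This hypothetical helper writes one `User-agent` / `Disallow: /` group per bot name and appends the groups to any rules you already have; `BadBot` and `AnotherBadBot` remain placeholders.

```python
from typing import Iterable


def build_robots_txt(blocked_agents: Iterable[str], existing: str = "") -> str:
    """Append a 'Disallow: /' group for each user agent to existing rules.

    Each group keeps its User-agent and Disallow lines together; groups
    are separated by a single blank line. Empty names are skipped.
    """
    groups = [
        f"User-agent: {agent.strip()}\nDisallow: /"
        for agent in blocked_agents
        if agent.strip()
    ]
    parts = [existing.strip()] if existing.strip() else []
    parts.extend(groups)
    return "\n\n".join(parts) + "\n"
```

For example, `build_robots_txt(["BadBot", "AnotherBadBot"])` produces the two groups shown above, ready to save as robots.txt.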

- Save and Upload robots.txt:

Save the file and upload it back to your site’s root directory. If prompted, overwrite the existing file.

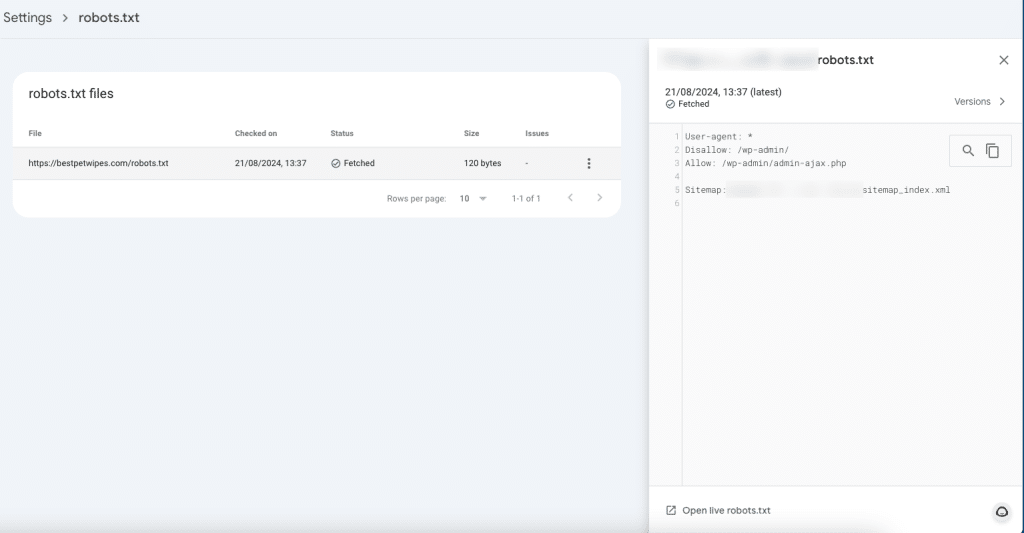

- Test robots.txt:

Use Google Search Console's robots.txt report (which replaced the retired robots.txt Tester) to confirm that Google can fetch and parse your file, then check that the user agents you listed are actually disallowed. This is important to confirm your settings work.
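For a quick local check before uploading, Python's standard `urllib.robotparser` module can parse the file and report whether a given user agent may fetch a path. It is not Google's parser, so treat it as a sanity check rather than a guarantee of how every crawler reads the file; `check_blocked` is a hypothetical helper name.

```python
from typing import Dict, Iterable
from urllib.robotparser import RobotFileParser


def check_blocked(robots_txt: str, agents: Iterable[str], path: str = "/") -> Dict[str, bool]:
    """Map each user agent to True if robots.txt disallows it from `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Record a fetch time: can_fetch() refuses every URL until one is set.
    parser.modified()
    return {agent: not parser.can_fetch(agent, path) for agent in agents}
```

For example, `check_blocked(open("robots.txt").read(), ["BadBot", "Googlebot"])`. This parser discards a `User-agent` line that is followed by a blank line before its `Disallow` rule, so keep each group's lines together.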

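By following these steps, you can keep compliant but unwanted crawlers off your site. Because malicious scrapers can ignore robots.txt, pair it with blocking at the server or CDN level, such as Cloudflare's bot protection described next.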

#2 Cloudflare Super Bot Fight Mode

To add an extra layer of protection against scraping and malicious bot activity, use Cloudflare’s Super Bot Fight Mode (available on Pro and Business plans; the Free plan offers the simpler Bot Fight Mode).

This feature automatically identifies bot traffic, lets verified bots such as search engine crawlers through, and blocks aggressive and harmful crawlers. Follow these steps to configure Super Bot Fight Mode:

- Log in to Your Cloudflare Account:

Sign in to the Cloudflare dashboard with your credentials.

- Select Your Domain:

Select the site you want to protect from the list of domains in your account.

- Go to Security:

In the dashboard, select ‘Security’ in the left-hand menu.

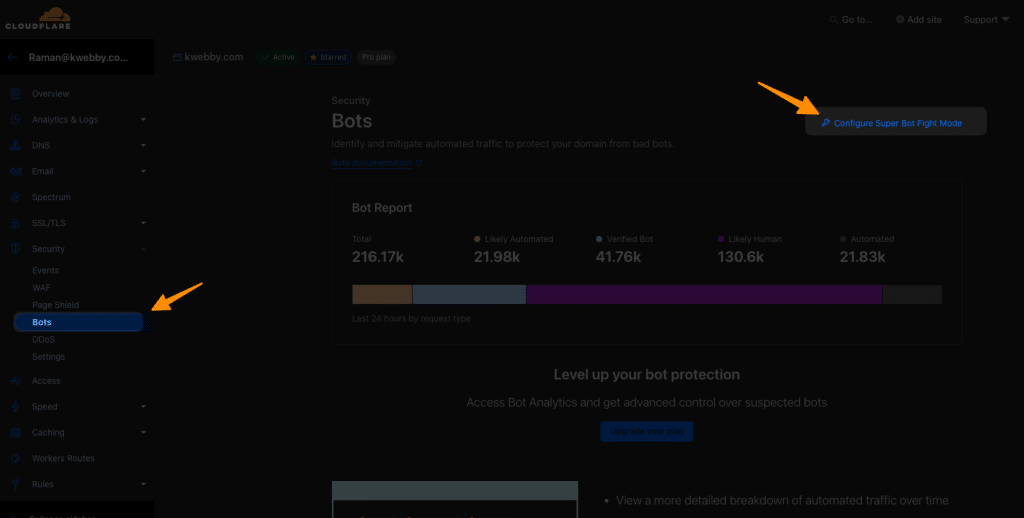

- Go to Bots:

In the Security settings, open ‘Bots’ and select “Configure Super Bot Fight Mode”. This is where you manage bot traffic.

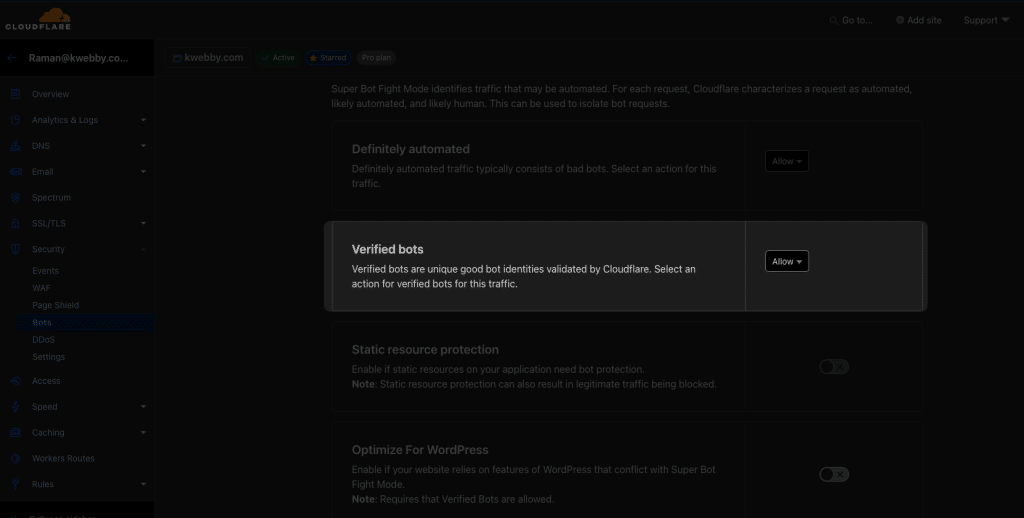

- Allow Verified Bots:

In the Super Bot Fight Mode settings, set verified bots (Google, Bing and other legitimate search engines) to Allow. This keeps useful crawlers unblocked so your site’s SEO isn’t affected.

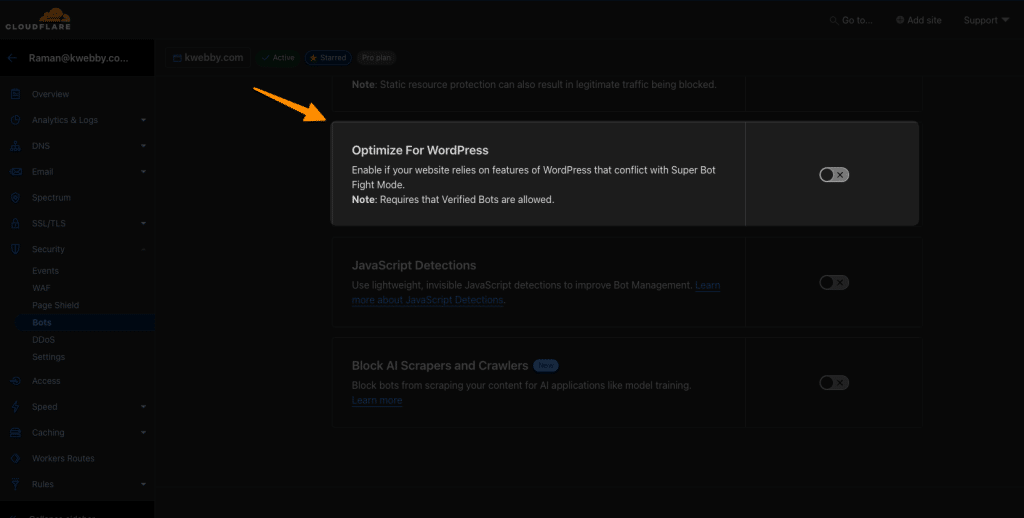

- Optimize for WordPress (if applicable):

If your site runs on WordPress, enable “Optimize for WordPress”. This helps keep Super Bot Fight Mode from interfering with WordPress’s own internal requests and common plugins.

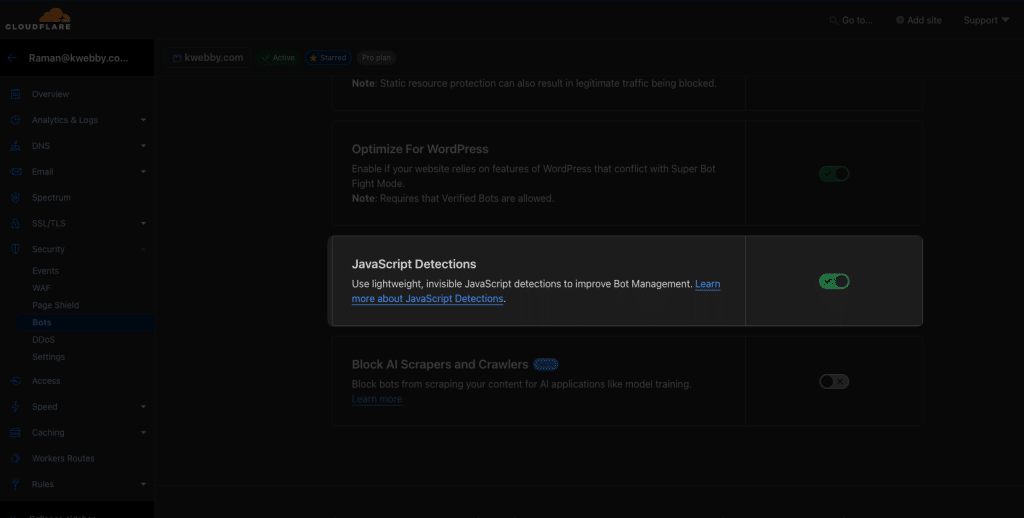

- Enable JavaScript Detection:

Turn on JavaScript detections if they aren’t already enabled. This lets Cloudflare run lightweight JavaScript checks in the visitor’s browser, which helps it tell humans from bots and block bots more effectively.

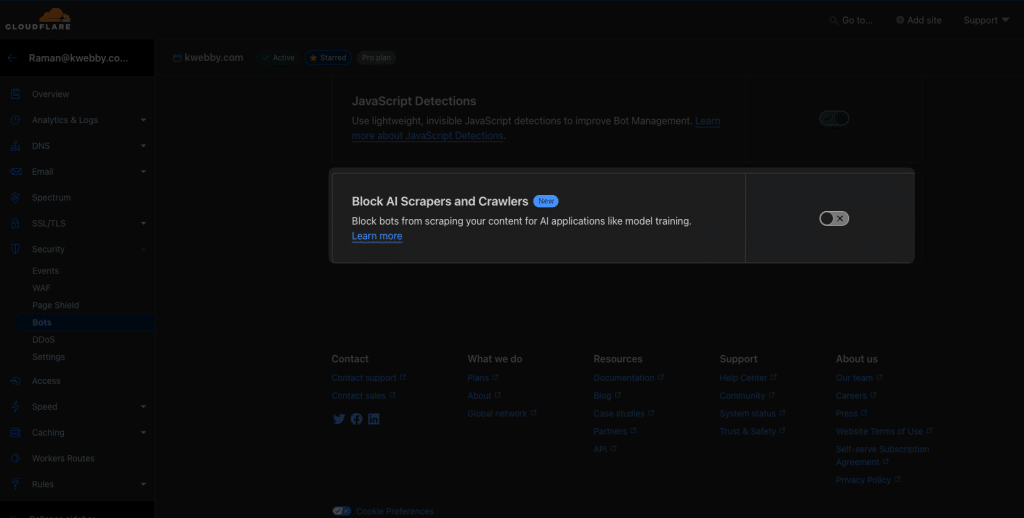

- Block AI Scrapers and Crawlers:

Finally, turn on the setting to block AI scrapers and crawlers. This adds protection against bots that collect site content, for example for AI training, on top of the other settings.

By following these steps, you can use Cloudflare’s Super Bot Fight Mode to protect your site, reduce the risk of scraping, and keep the experience smooth for legitimate users.
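If you manage several domains, the same settings can be applied through Cloudflare's API instead of the dashboard. The sketch below is written under assumptions: the endpoint (`PUT /zones/{zone_id}/bot_management`) and the field names (`sbfm_definitely_automated`, `sbfm_verified_bots`, `optimize_wordpress`, `enable_js`, `ai_bots_protection`) reflect Cloudflare's API v4 reference as we understand it and may differ by plan or change over time, so verify them before use. The helper names are hypothetical, and the API token needs permission to edit the zone's bot settings.

```python
import json
import urllib.request

# ASSUMPTION: endpoint and field names follow Cloudflare's API v4 bot
# management config as documented at the time of writing; verify them.
API_URL = "https://api.cloudflare.com/client/v4/zones/{zone_id}/bot_management"


def sbfm_settings(wordpress: bool = False) -> dict:
    """Settings mirroring the dashboard steps (field names assumed)."""
    return {
        "sbfm_definitely_automated": "block",  # block definitely automated traffic
        "sbfm_verified_bots": "allow",         # keep Google, Bing, etc. allowed
        "optimize_wordpress": wordpress,       # only for WordPress sites
        "enable_js": True,                     # JavaScript detections
        "ai_bots_protection": "block",         # block AI scrapers and crawlers
    }


def apply_settings(zone_id: str, api_token: str, settings: dict) -> dict:
    """PUT the settings for one zone and return the parsed JSON reply."""
    request = urllib.request.Request(
        API_URL.format(zone_id=zone_id),
        data=json.dumps(settings).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

For example, `apply_settings("your-zone-id", "your-api-token", sbfm_settings(wordpress=True))`, using your own zone ID and token. Because this is a PUT, check whether fields you leave out are reset to defaults before running it against a live zone.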

All done!

In conclusion, protecting your site from targeted scraping requires a multi-layered approach. By configuring your robots.txt to block known bad bots and using Cloudflare’s Super Bot Fight Mode, you can secure your site and keep it performing well.

Together, these measures cut down unwanted automated traffic while letting legitimate search engine bots crawl without interference, protecting your site’s integrity and usability.
