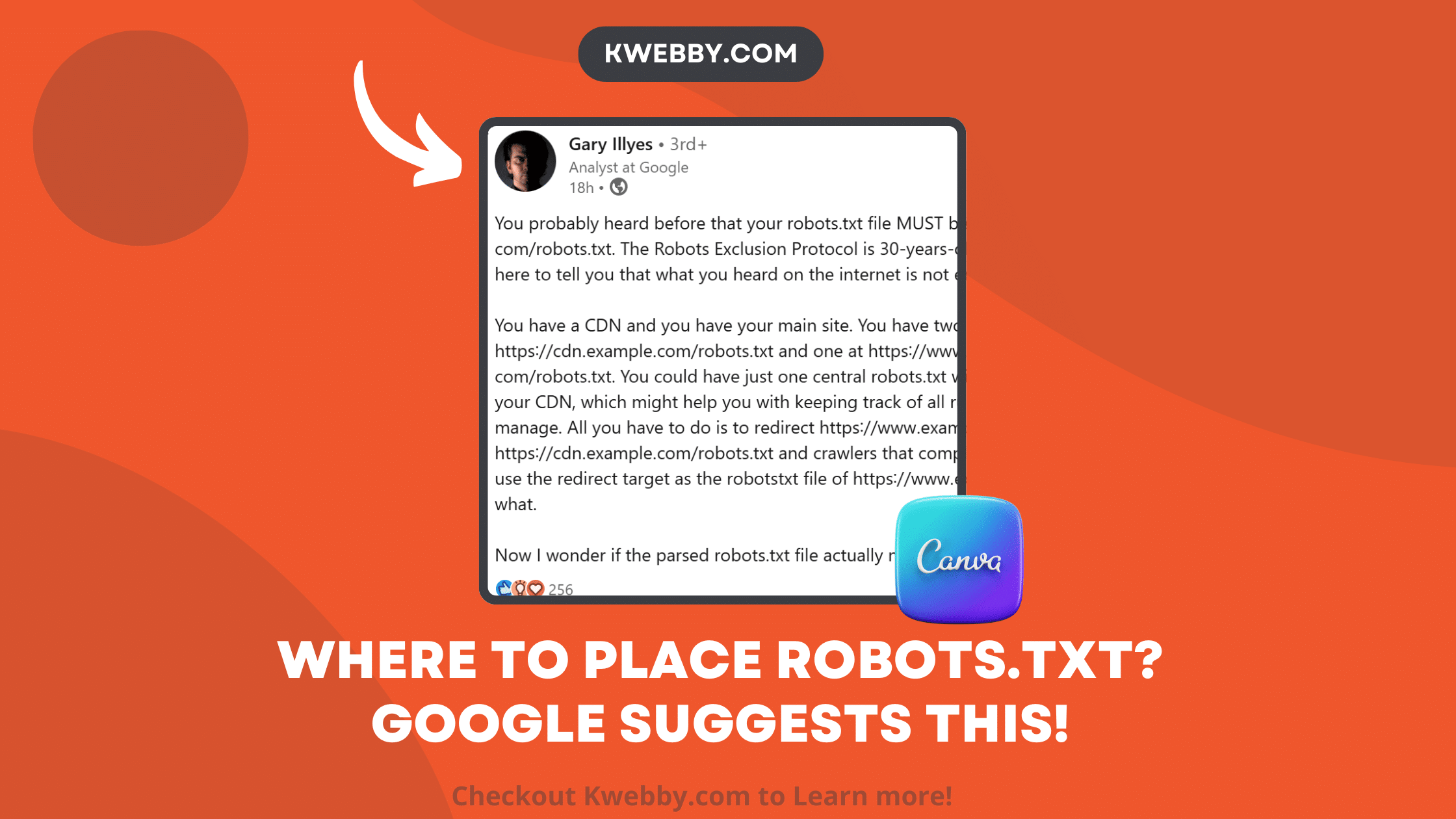

In a recent LinkedIn post, Google Analyst Gary Illyes sparked a discussion that could change how webmasters approach a basic task: deciding where their robots.txt files live.

Traditionally, it has been widely accepted that a website’s robots.txt file must be placed at the root of the domain, such as example.com/robots.txt, to guide web crawlers. However, Illyes pointed to a nuance in the Robots Exclusion Protocol (REP). Crawlers still request the file at the root, but that root URL can redirect to a file hosted somewhere else, so the long-held rule is less rigid than it seems.
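The root-placement rule can be made concrete with a short sketch. The Python function below, `robots_txt_url` (a hypothetical helper, not part of any library), derives the URL a crawler requests for a page's robots.txt. Following RFC 9309, that URL is `/robots.txt` on the same scheme, host and port as the page. The sketch also shows why each subdomain gets its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL a crawler would request for page_url.

    Hypothetical helper: RFC 9309 places the file at /robots.txt on the
    same scheme and authority (host and optional port) as the page.
    """
    parts = urlsplit(page_url)
    # Keep the scheme and authority; replace the path and drop query/fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=1"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://cdn.example.com/img/logo.png"))
# https://cdn.example.com/robots.txt
```

Because `www.example.com` and `cdn.example.com` produce different robots.txt URLs, a crawler treats them as separate hosts with separate files.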

This invites us to reconsider our practices and gives webmasters a clearer picture of how much flexibility they have in managing their site’s crawl directives.

Let’s explore the implications of this newfound knowledge and how it can optimize our approach to robots.txt file placement.

What does Google say?

Google Analyst Gary Illyes recently shared insights that challenge the conventional wisdom regarding the placement of robots.txt files. Let’s break down the key points he discussed, which could change the way webmasters manage their site’s crawl directives:

- Traditional Placement: The conventional view is that a robots.txt file must be located at the root of the domain, e.g., `https://www.example.com/robots.txt`. A file at this location tells web crawlers which parts of the website they may or may not access.

- Flexibility with REP: The Robots Exclusion Protocol (REP), which has been around for 30 years, is more flexible than commonly assumed. Illyes highlighted that the idea that the robots.txt file itself must live at the root is not entirely accurate.

- Multiple Robots.txt Files: Because robots.txt rules apply per host, a website with different subdomains or a Content Delivery Network (CDN) can have a separate robots.txt file for each host. For instance, one could be at `https://cdn.example.com/robots.txt` and another at `https://www.example.com/robots.txt`.

- Centralized Robots.txt File: A more efficient way might be to have a single, centralized robots.txt file. You can host this file on your CDN and include all the crawl rules within it. This approach simplifies the management of crawl directives.

- Redirection Strategy: To implement a single robots.txt file, you can redirect `https://www.example.com/robots.txt` to `https://cdn.example.com/robots.txt`. Crawlers that comply with RFC 9309 will then use the redirect target as the effective robots.txt file for `https://www.example.com/`.

- Simplified Management: This method helps in keeping track of all crawling rules effectively. By centralizing the management, you reduce the complexity that arises from maintaining multiple robots.txt files for different parts of your site.

- Future Considerations: Illyes also raised the question of whether the robots.txt file a crawler parses even needs to be named “robots.txt”. This opens further discussion about even more adaptable and streamlined ways of managing crawl directives.
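The redirection strategy can be sketched in Python as a small simulation rather than a real crawler. The `RESPONSES` table stands in for HTTP fetches, and the helper names `resolve_robots_txt` and `effective_parser` are hypothetical. The sketch follows up to five consecutive redirects (RFC 9309 recommends following at least five, even across hosts). It then parses the final body with the standard library's `urllib.robotparser`, so the CDN-hosted rules are applied to the original host.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

# Hypothetical fetch results (status, Location header or body) standing in
# for real HTTP requests; a production crawler would fetch these URLs.
RESPONSES = {
    "https://www.example.com/robots.txt": (301, "https://cdn.example.com/robots.txt"),
    "https://cdn.example.com/robots.txt": (200, "User-agent: *\nDisallow: /private/\n"),
}

REDIRECT_CODES = {301, 302, 303, 307, 308}
MAX_REDIRECTS = 5  # RFC 9309 recommends following at least five


def resolve_robots_txt(url, responses=RESPONSES, max_redirects=MAX_REDIRECTS):
    """Follow redirects from url and return (final_url, body).

    Returns (None, None) when the file is unavailable, which this sketch
    treats like a missing robots.txt (no restrictions).
    """
    for _ in range(max_redirects + 1):
        status, payload = responses.get(url, (404, ""))
        if status in REDIRECT_CODES:
            url = urljoin(url, payload)  # the Location value may be relative
            continue
        if status == 200:
            return url, payload
        if 500 <= status <= 599:
            # Server error: RFC 9309 says to assume a complete disallow.
            return url, "User-agent: *\nDisallow: /\n"
        return None, None  # 4xx: file unavailable
    return None, None  # too many redirects: treated as unavailable


def effective_parser(site_root, responses=RESPONSES):
    """Build a parser whose rules apply to site_root, wherever the file lives."""
    final_url, body = resolve_robots_txt(urljoin(site_root, "/robots.txt"), responses)
    parser = RobotFileParser()
    parser.parse(body.splitlines() if body is not None else [])
    return final_url, parser


final_url, parser = effective_parser("https://www.example.com/")
print(final_url)  # https://cdn.example.com/robots.txt
# The CDN-hosted rules are checked against paths on www.example.com.
print(parser.can_fetch("Googlebot", "https://www.example.com/private/page"))  # False
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/"))  # True
```

One caveat: Python's `robotparser` applies the first matching rule rather than RFC 9309's longest-match rule, so this sketch keeps its rules non-overlapping.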

By understanding and leveraging this flexibility, webmasters can optimize their strategies for guiding web crawlers, enhancing site management, and maintaining a clear and effective approach to SEO practices.


What are the benefits of this to you?

- Centralized Management: By consolidating robots.txt rules in one location, it becomes easier to maintain and update crawl directives across your entire web presence.

- Improved Consistency: A single source of truth for robots.txt rules minimizes the risk of having conflicting directives between your main site and CDN, ensuring more uniform control over web crawlers.

- Flexibility: This strategy offers more adaptable configurations, which is particularly beneficial for websites with complex architectures, multiple subdomains, or those utilizing various CDNs.

- Enhanced Site Management: A streamlined approach to managing robots.txt files can significantly simplify overall site management, reducing administrative overhead.

- Boosted SEO Efforts: Improved consistency and efficiency in managing crawl directives can support better SEO performance, as search engines get a clearer and more accurate picture of which parts of your site they may crawl.

Conclusion

In conclusion, Google’s recent insights on the flexibility of robots.txt placement offer webmasters a practical opportunity. By embracing a centralized management approach, you can significantly streamline your site’s crawl directives, ensuring more consistent and efficient control over web crawlers.

This not only simplifies the administrative burden but also optimizes your SEO efforts, ultimately contributing to a more robust online presence.

With the newfound understanding of how to leverage robots.txt files effectively, webmasters are now better equipped to navigate the evolving landscape of web management and maximize their site’s potential. So, let’s take this knowledge forward, re-evaluate our practices, and aim for a more harmonious and effective web environment.
