In the digital marketing world, controlling your website’s visibility to search engines is key. Enter the X-Robots-Tag, a powerful but often overlooked tool to fine-tune how your content interacts with search engine crawlers.

Whether you want to stop certain pages from being indexed or tell search engines how to handle your content, the X-Robots-Tag is your new best friend for optimising your online presence.

In this post, we’ll go into what the X-Robots-Tag is, how it works and best practices for using it so you can get the most out of your website’s SEO.

What is the X-Robots-Tag?

The X-Robots-Tag might sound technical, but it’s a simple way to control how search engines treat your content. It’s an optional HTTP response header that tells search engines how to index and serve your web pages.

Unlike the robots meta tag, which can only be applied to HTML pages, the X-Robots-Tag can be applied to non-HTML files including images, text files and PDFs, so you can manage the visibility of different content types with ease.

For example, if you have a PDF document with sensitive information that shouldn’t be in search results, you can use the X-Robots-Tag in the HTTP response to tell search engines not to index it. Imagine your server responding with something like this:

HTTP/1.1 200 OK

Date: Fri, 30 Aug 2024 11:38:17 GMT

Content-Encoding: gzip

X-Robots-Tag: noindex

This lets you control not just how your website pages are indexed but also how non-HTML files are treated by search crawlers, giving you a more comprehensive approach to managing your online presence. You can even specify directives for specific user agents, as in this example:

HTTP/1.1 200 OK

Date: Fri, 30 Aug 2024 11:38:17 GMT

Content-Encoding: gzip

X-Robots-Tag: googlebot: noarchive, nofollow

By using the X-Robots-Tag you get an extra layer of control over how each crawler treats your content.
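To make the header format concrete, here is a minimal Python sketch that groups directives by crawler. The function name `parse_x_robots_tag` and the `"*"` key for rules without a crawler prefix are illustrative choices, not part of any specification, and the sketch assumes simple comma-separated values (a date value that itself contains a comma would not be handled).

```python
from collections import defaultdict

# Directives that use their own "name: value" form, so a colon after
# them does not introduce a crawler name.
VALUED_DIRECTIVES = {"unavailable_after", "max-snippet",
                     "max-image-preview", "max-video-preview"}


def parse_x_robots_tag(header_values):
    """Group directives by crawler; "*" collects rules with no crawler prefix."""
    rules = defaultdict(set)
    for value in header_values:
        agent = "*"
        prefix, sep, rest = value.partition(":")
        # Treat the text before the first colon as a crawler name only if
        # it is a single token that is not a valued directive.
        if sep and "," not in prefix and prefix.strip().lower() not in VALUED_DIRECTIVES:
            agent, value = prefix.strip().lower(), rest
        for directive in value.split(","):
            directive = directive.strip().lower()
            if directive:
                rules[agent].add(directive)
    return dict(rules)
```

Calling `parse_x_robots_tag(["noindex", "googlebot: noarchive, nofollow"])` separates the general `noindex` rule from the two rules aimed only at `googlebot`.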

X-Robots-Tag Directives

As we get into the X-Robots-Tag more, we need to understand its directives. Google’s specifications say that any directive used in a robots meta tag can also be used as an X-Robots-Tag. While the full list of valid indexing and serving directives is long, the most common ones are:

- noindex: This tells search engines not to show the page, file or media in search results. For example:

X-Robots-Tag: noindex

- nofollow: This tells search engine crawlers not to follow the links on the page or document. For example:

X-Robots-Tag: nofollow

- none: This is equivalent to “noindex, nofollow”, so it restricts both visibility and link following. Example:

X-Robots-Tag: none

- noarchive: This prevents search engines from showing a cached version of the page in their results. Example:

X-Robots-Tag: noarchive

- nosnippet: This tells search engines not to show a snippet or preview of the page in search results. For example:

X-Robots-Tag: nosnippet

Use these directives wisely. For the full list of directives accepted by Google, such as notranslate and noimageindex, check their documentation. Used deliberately, these directives keep your site’s visibility in line with your strategy.
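The effect of the common directives above can be summarised in a few lines of Python. This is only a sketch: `effective_permissions` and its result keys are hypothetical names, and it covers just the five directives described in this section.

```python
def effective_permissions(directives):
    """Translate a collection of directives into simple yes/no permissions."""
    d = {item.strip().lower() for item in directives}
    if "none" in d:
        # "none" is shorthand for noindex + nofollow.
        d |= {"noindex", "nofollow"}
    return {
        "index": "noindex" not in d,
        "follow_links": "nofollow" not in d,
        "cached_copy": "noarchive" not in d,
        "snippet": "nosnippet" not in d,
    }
```

With an empty list every permission comes back `True`, which mirrors the default behaviour when no directive is set.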

Where to Add the X-Robots-Tag

To use the X-Robots-Tag on your website you need to put it in the right configuration files depending on the server you’re using. Below are step by step instructions for both Apache and Nginx servers so you can optimize your website visibility easily.

Apache Server (htaccess)

- Access Your Server’s Files: Use an FTP client or your web hosting provider’s file manager to connect to your server.

- Locate the .htaccess File: Go to the root directory of your website. This is usually the public_html or www folder. Look for the .htaccess file. If it doesn’t exist you can create one using a text editor.

- Edit the .htaccess File: Open the .htaccess file for editing. You can use any plain-text editor, such as Notepad or TextEdit.

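- Add X-Robots-Tag Directive: Put the X-Robots-Tag directive in the file. Apache does not allow <Directory> blocks inside .htaccess (they are only valid in the main server or virtual host configuration), so to stop search engines indexing all files in the /private-files/ directory, place the following in an .htaccess file inside that directory. The mod_headers module must be enabled for it to take effect:

<IfModule mod_headers.c>

Header set X-Robots-Tag "noindex"

</IfModule>

If you can edit the main server configuration instead, the same Header line can go inside a <Directory "/home/username/public_html/private-files"> block there.

- Save: After adding your directives, save the file and close the editor.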

- Test: To check that the tag is working, use an online header checker or the Network tab of your browser’s developer tools to inspect the HTTP response headers of a file in that directory.

Nginx Server (nginx.conf)

- Access Your Server’s Configuration: Use SSH or your control panel to access your Nginx server configuration file, usually named nginx.conf.

- Back Up Your Configuration File: Before you make any changes, back up the original nginx.conf file. This will allow you to restore the previous configuration if something goes wrong.

- Edit the nginx.conf File: Open the nginx.conf file with a text editor. You may need superuser (root) privileges to edit this file.

- Set the X-Robots-Tag Directive: Add the following in the server block to prevent all search engines from indexing the /private-files/ directory:

location /private-files/ {

    add_header X-Robots-Tag "noindex";

}

- Save and Exit: After adding your directive, save and exit the editor.

- Test: Check the configuration syntax with sudo nginx -t, then reload Nginx with sudo nginx -s reload. Finally, test the implementation by checking the HTTP response headers of any file within the location.

By following these steps for either Apache or Nginx, you’ve added the X-Robots-Tag to your server configuration. Now you have more control over how search engines interact with your content and your website’s SEO.
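If your site is served by a Python application rather than directly by Apache or Nginx (an assumption that goes beyond the two servers covered above), the same rule can be sketched as WSGI middleware. The names `add_x_robots_tag`, `demo_app` and `response_headers` are hypothetical helpers written for this illustration.

```python
def add_x_robots_tag(app, prefix="/private-files/", value="noindex"):
    """Wrap a WSGI app so responses under `prefix` carry X-Robots-Tag."""
    def wrapped(environ, start_response):
        def patched_start(status, headers, exc_info=None):
            # Only paths under the protected prefix get the header.
            if environ.get("PATH_INFO", "").startswith(prefix):
                headers = list(headers) + [("X-Robots-Tag", value)]
            return start_response(status, headers, exc_info)
        return app(environ, patched_start)
    return wrapped


def demo_app(environ, start_response):
    # Stand-in application that serves the same body for every path.
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


def response_headers(app, path):
    """Call a WSGI app directly and return its response headers as a dict."""
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured.update(headers)

    list(app({"PATH_INFO": path, "REQUEST_METHOD": "GET"}, start_response))
    return captured
```

Wrapping the app with `add_x_robots_tag(demo_app)` adds the header for `/private-files/report.pdf` and leaves a path such as `/index.html` untouched, which is the same behaviour the server configurations above aim for.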

How do I find the X-Robots-Tag for my webpage?

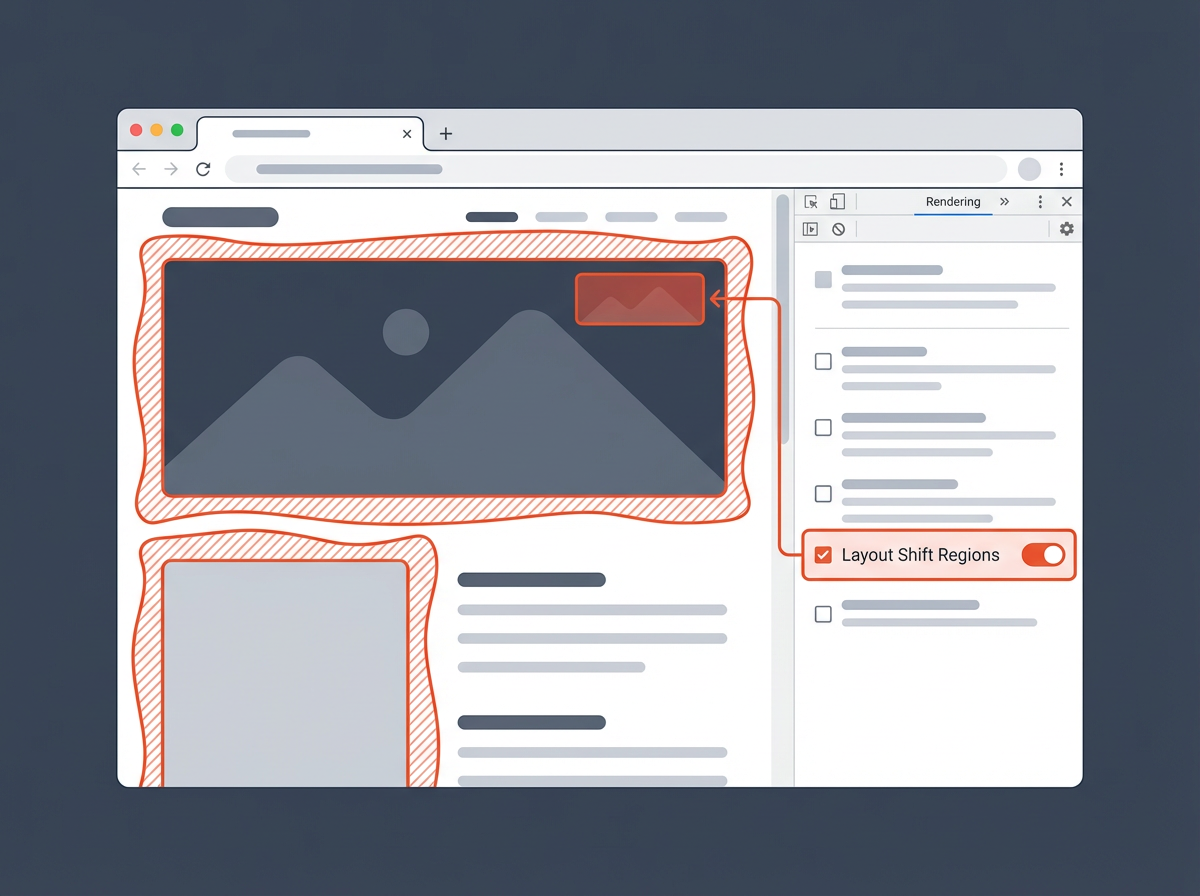

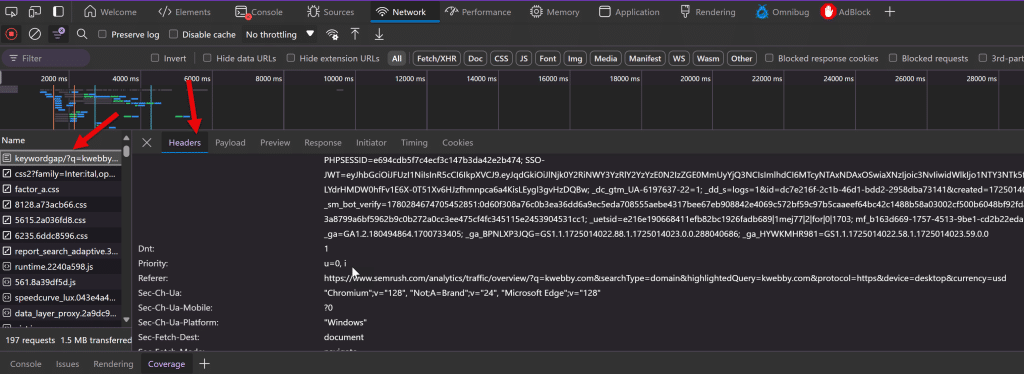

To check the X-Robots-Tag for a specific webpage, you need to look at the HTTP response headers, because this tag is not part of the HTML markup. It might seem a bit complex at first, but with a few simple steps you’ll be able to find it. Here’s how you can view the HTTP response headers in Google Chrome:

- Load the Page: Enter the URL of the webpage you want to inspect into Google Chrome.

- Open Developer Tools: Right-click anywhere on the page and select “Inspect” to open the developer tools.

- Select the Network Tab: Go to the “Network” tab in the developer tools.

- Reload the Page: Refresh the page to gather network data. This will populate the Network panel with all the files and resources loaded by the page.

- Choose the File: Select the request you want to inspect from the list on the left side of the panel. For the page itself, this is usually the first entry.

- View HTTP Headers: Once the file is selected, open the “Headers” tab on the right side of the panel and look under “Response Headers”. Here you can find the X-Robots-Tag if it’s set.

By following these steps you can check the X-Robots-Tag for any webpage and know how search engines are being told to index or not index your content.
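The same check can be scripted. The sketch below uses only Python’s standard library; `fetch_x_robots_tag` is a hypothetical helper name, and it assumes the server answers HEAD requests with the same headers it would send for a normal page load, which is not guaranteed for every site.

```python
import urllib.request


def fetch_x_robots_tag(url, timeout=10):
    """Send a HEAD request and return every X-Robots-Tag value (may be empty)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # get_all returns None when the header is absent.
        return response.headers.get_all("X-Robots-Tag") or []
```

An empty list means no X-Robots-Tag header was sent for that URL. A server can send the header more than once, which is why the function returns a list rather than a single string.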

X-Robots-Tag vs Robots Meta Tag

| Feature | Robots Meta Tag | X-Robots-Tag |
|---|---|---|
| Usage | Inserted within the `<head>` section of an HTML page. | Included in the HTTP response header. |
| Content Control | Controls how individual pages are indexed and served by search engines. | Controls indexing of various resources, including non-HTML files like PDFs, images, and videos. |
| Customization | Allows targeting specific crawlers using user agent tokens (e.g., googlebot, googlebot-news). | Allows setting rules for specific crawlers by specifying user agents in the HTTP header. |
| Example | `<meta name="robots" content="noindex">` | `X-Robots-Tag: noindex` |
| CMS Compatibility | May require CMS customization or search engine settings page to insert meta tags. | Applied via server configuration, making it suitable for resources where direct HTML modification isn’t possible. |
| Case Sensitivity | Both the name and content attributes are case-insensitive. | The header, user agent name, and specified values are not case-sensitive. |
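To illustrate the “Usage” row, the sketch below reads robots rules out of an HTML document with Python’s built-in `html.parser`, which is only possible because the meta tag lives in the markup. The class `RobotsMetaParser`, the function `robots_meta_rules` and the default list of crawler names are assumptions made for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the content of robots-style <meta> tags, keyed by crawler name."""

    def __init__(self, crawler_names=("robots", "googlebot")):
        super().__init__()
        self.crawler_names = {name.lower() for name in crawler_names}
        self.rules = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        name = attr.get("name", "").lower()
        if name in self.crawler_names:
            parts = [p.strip().lower() for p in attr.get("content", "").split(",")]
            self.rules.setdefault(name, set()).update(p for p in parts if p)


def robots_meta_rules(html):
    """Return {crawler_name: set_of_directives} found in the HTML text."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.rules
```

A PDF or image has no `<head>` to parse, so for those files the HTTP header is the only place such rules can appear.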

Examples of X-Robots-Tag

Here are a few scenarios where the X-Robots-Tag is better than the robots meta tag for managing content:

- Blocking Non-HTML Resources: Suppose you have a PDF document with sensitive information you don’t want search engines to index. With the X-Robots-Tag you can prevent this PDF from showing up in search results by adding the following header to the HTTP response:

HTTP/1.1 200 OK

X-Robots-Tag: noindex

Search engines that support the header will apply the rule even for file types that cannot carry meta tags.

- Controlling Access for Different Crawlers: If you want to block indexing of the images on your site for one crawler but allow another to index them, the X-Robots-Tag lets you target rules by user agent. For example:

HTTP/1.1 200 OK

X-Robots-Tag: googlebot: noindex

X-Robots-Tag: bingbot: index

The second line is optional, since indexing is the default. The robots meta tag can also target crawlers by name, but only inside HTML pages; the header extends the same flexibility to images and other non-HTML files.

- Combining Multiple Directives: Often you need to manage content visibility with multiple directives at once. The X-Robots-Tag can do this easily. For example, if you want to block indexing and also tell search engines not to archive the content, you could use:

HTTP/1.1 200 OK

X-Robots-Tag: noindex, noarchive

This ability to combine instructions in one header makes the X-Robots-Tag a must-have in any webmaster’s SEO toolbox.
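The combinations shown in these scenarios can be generated programmatically. This is a small sketch, with `build_x_robots_headers` as a hypothetical helper name; it uses `None` as the key for rules that apply to every crawler, which is a convention chosen here rather than anything standard.

```python
def build_x_robots_headers(rules):
    """Turn {crawler_or_None: [directives]} into (name, value) header pairs."""
    headers = []
    for crawler, directives in rules.items():
        value = ", ".join(directives)
        if crawler:
            # Rules aimed at one crawler are prefixed with its name.
            value = f"{crawler}: {value}"
        headers.append(("X-Robots-Tag", value))
    return headers
```

For instance, `build_x_robots_headers({None: ["noindex", "noarchive"], "googlebot": ["nofollow"]})` yields one general header line and one line scoped to `googlebot`.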

Google Answers: Is It “OK” to Be Missing the X-Robots-Tag on Your Website?

In a recent SEO Office Hours session, Google’s John Mueller answered a common question about the X-Robots-Tag. Eric Richards asked:

“It looks like I’m missing an X-Robots-Tag. How do I resolve this issue?”

Here’s what John said:

- Not an Issue: John said missing the X-Robots-Tag is not an issue. It’s just a mechanism for specific directives.

- Purpose of Tags: He explained the X-Robots HTTP header and robots meta tag are only relevant if you want search engines to treat a page differently. For example if you want a page to be no-indexed these tags can tell search engines to apply a “no-index” directive.

- Default Treatment: If a page doesn’t have an X-Robots-Tag or a Robots meta tag it will be treated like any other page. That means it will be indexed by search engines based on default behaviour.

- Indexing is Possible: Most importantly John said without these tags the page can and will be indexed.

This makes it clear that a missing X-Robots-Tag is not a problem unless you need to control indexing.

In summary the X-Robots-Tag gives you options to control how search engines index your content but missing it isn’t the end of the world – understanding what it does lets you make informed decisions about your website’s visibility and ultimately your SEO.
