Scraping user accounts on Instagram is an effective method for gathering data and gaining insights into user behaviours and trends.

In this guide, we will explore how to achieve this using Python, a powerful programming language that offers extensive libraries and frameworks for web scraping tasks.

We’ll use Visual Studio Code as our development environment. The Selenium library does most of the work: it automates a real browser, so the script can log in, open pages, and click through posts.

ChromeDriver is the bridge between Selenium and Google Chrome; it lets the script drive Instagram’s web interface the way a user would. With these tools in place, we’ll walk through how to scrape Instagram user accounts efficiently and responsibly.

Setup Environment

Download and Setup

First, download Visual Studio Code and Python from their respective official websites according to your operating system:

- Visual Studio Code: Visit Visual Studio Code’s official website to download the version that is compatible with your OS.

- Python: Head over to Python’s official website and select the installer that matches your operating system.

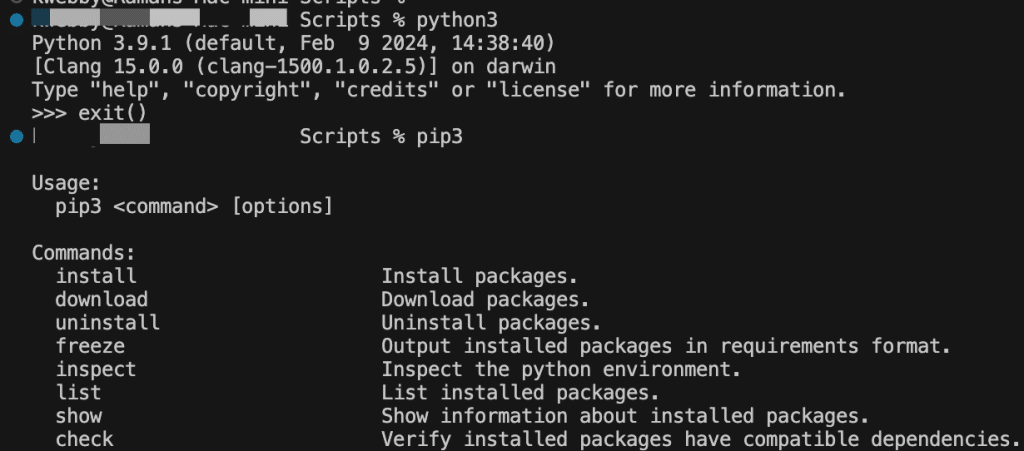

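- pip: Recent Python installers already include pip, the package installer that downloads Python packages from the Python Package Index (PyPI). Confirm that it is available by running `python -m pip --version` in your terminal or command prompt. If it is missing, download `get-pip.py` as described in the official pip documentation and run `python get-pip.py`.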
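As a quick sanity check after installation, the following minimal sketch reports the interpreter version and whether pip can be imported. The helper names `pip_is_available` and `python_version_string` are made up for this illustration and are not part of the scraper.

```python
import importlib.util
import sys


def pip_is_available() -> bool:
    """Return True if the running interpreter can locate the pip package."""
    return importlib.util.find_spec("pip") is not None


def python_version_string() -> str:
    """Return the interpreter version as 'major.minor.micro'."""
    return ".".join(str(part) for part in sys.version_info[:3])


print(f"Python {python_version_string()}, pip available: {pip_is_available()}")
```

Save it under any file name and run it with the same `python` command you plan to use for the scraper, so that you check the right interpreter.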

Create Folder

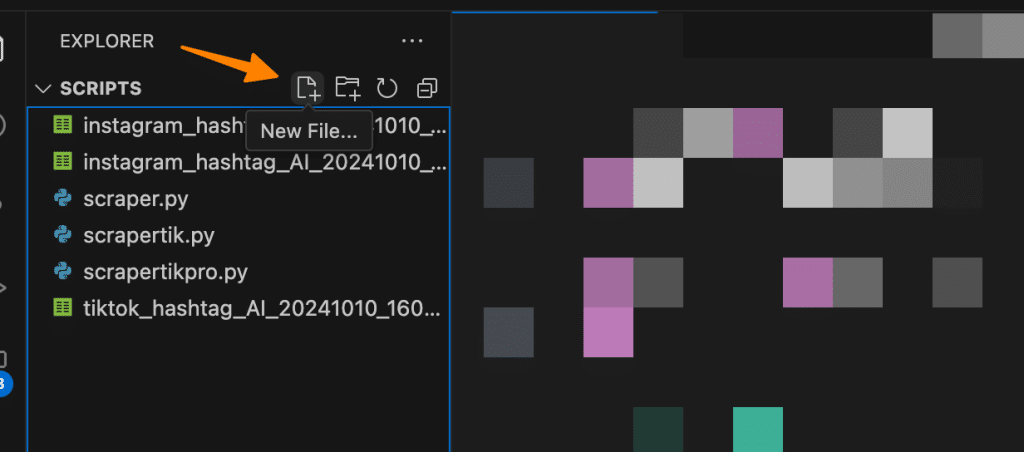

Once both installations are complete, proceed by creating a dedicated folder for your project.

Navigate to your desktop or another location of your choice and create a new folder named `scraper`.

Open this folder using Visual Studio Code, which you can do by selecting “Open Folder” from the File menu.

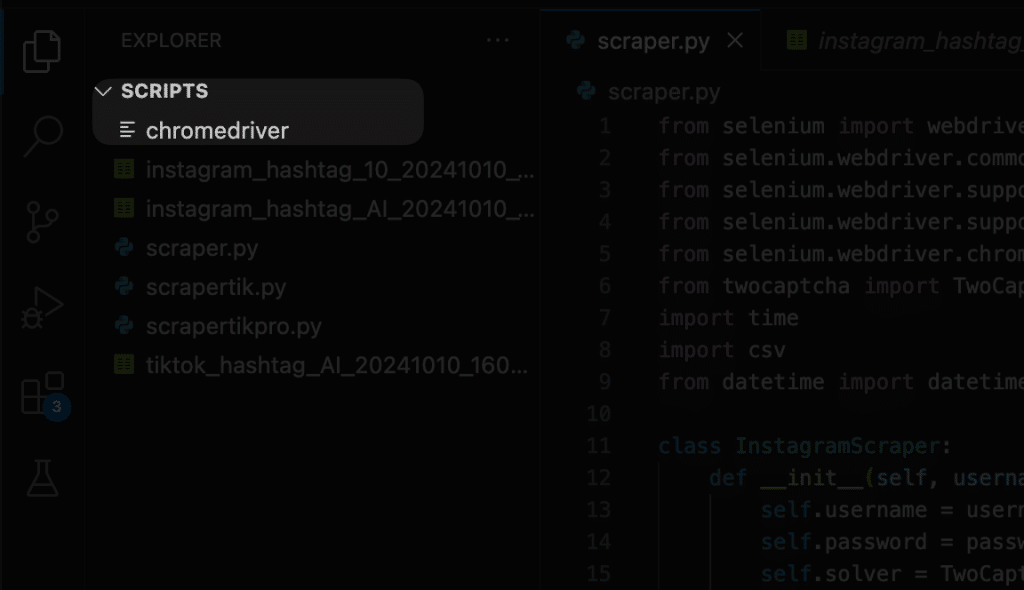

Create a Blank Scraper File

Create a new file named `scraper.py` inside the `scraper` folder.

This file will be where you write your Python script for scraping Instagram user accounts. With this setup, you’re ready to begin coding in an organized workspace that will streamline your development process.
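If you prefer to create the folder and the empty file from Python instead of the file explorer, a minimal sketch could look like this. The function name `create_project` is hypothetical, and the base directory is whatever location you choose.

```python
from pathlib import Path


def create_project(base_dir: Path) -> Path:
    """Create base_dir/scraper/scraper.py if missing and return the script path."""
    project_dir = base_dir / "scraper"
    project_dir.mkdir(parents=True, exist_ok=True)
    script_path = project_dir / "scraper.py"
    # touch() creates an empty file; the content of an existing file is kept
    script_path.touch(exist_ok=True)
    return script_path
```

For example, `create_project(Path.home() / "Desktop")` would produce the same layout as the manual steps, assuming your desktop folder is at that location.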

Download Chrome Driver to Same Folder

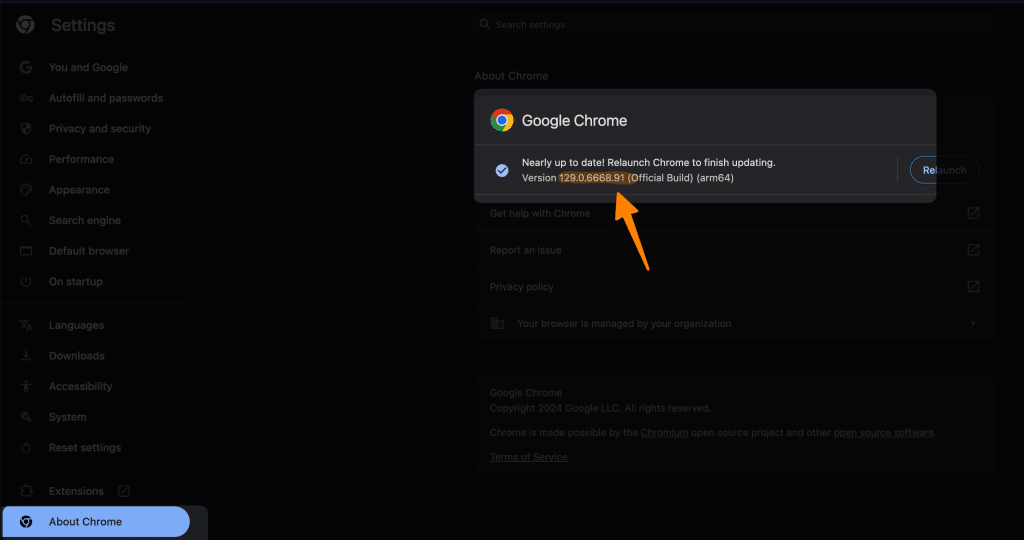

Check Your Chrome Version:

Open Google Chrome. Click on the three vertical dots in the top-right corner to access Chrome settings. Navigate to “Help” and then select “About Google Chrome.” A new tab will open displaying your current Chrome version (e.g., Version 92.0.4515.107).

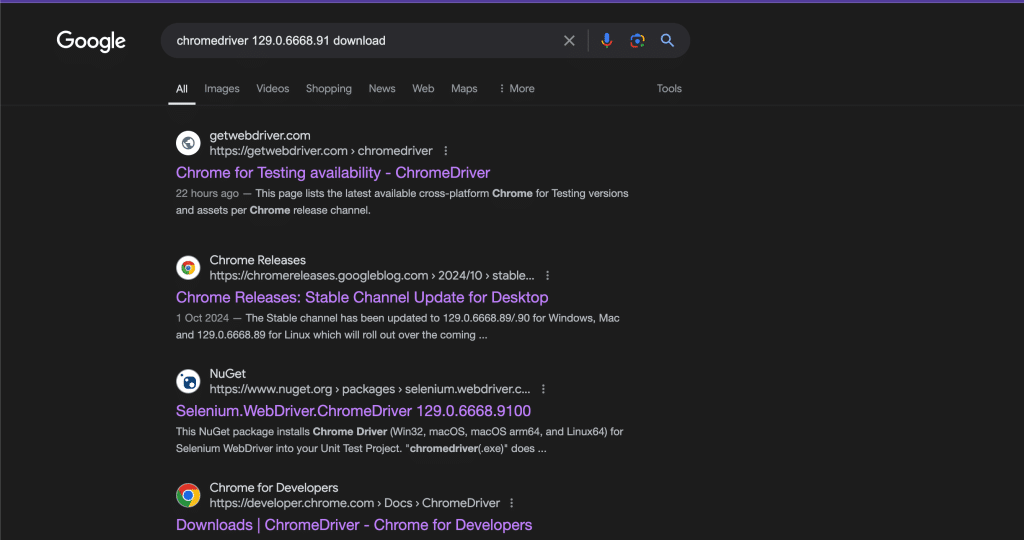

Search for ChromeDriver:

Now that you have your Chrome version, open a new tab in your web browser. Type “chromedriver 92.0.4515.107 download” into the search bar, replacing “92.0.4515.107” with your actual Chrome version.

Download ChromeDriver:

Prefer the official source over third-party mirrors. The site chromedriver.chromium.org hosts the downloads for older releases; for Chrome 115 and newer it points to the Chrome for Testing availability page. Ensure that the link is specifically for “ChromeDriver,” not Chrome itself.

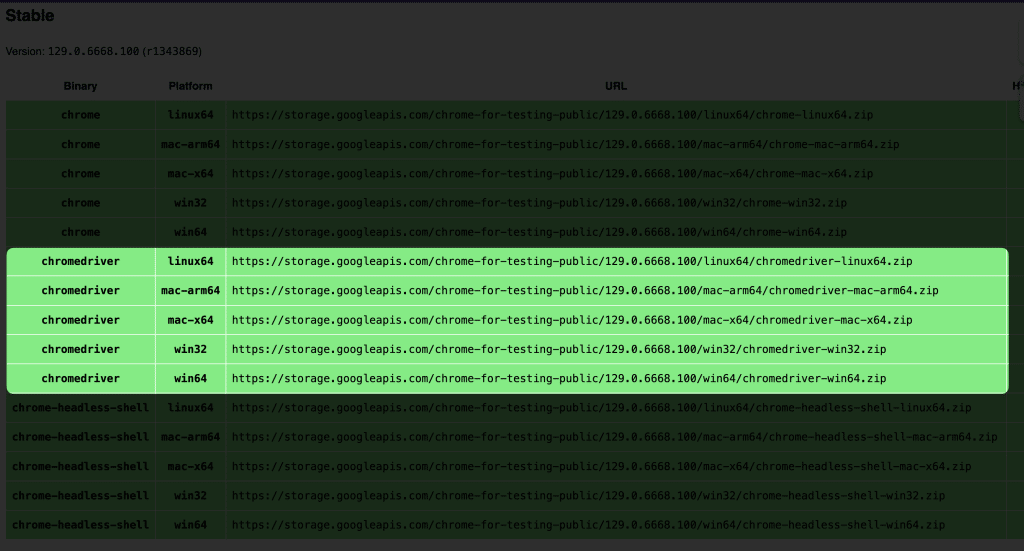

Select and Download the Appropriate File:

On the ChromeDriver download page, select the ChromeDriver version that matches your Chrome version. Download the appropriate file for your operating system (Windows, Mac, or Linux).
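To make "matches your Chrome version" concrete, here is a small sketch that compares the leading (major) number of two version strings. It assumes that agreeing on the major version is the relevant check; the helper names and the driver version in the usage note are illustrative only.

```python
def major_version(version: str) -> int:
    """Return the leading number of a dotted version such as '92.0.4515.107'."""
    return int(version.strip().split(".")[0])


def same_major(chrome_version: str, driver_version: str) -> bool:
    """Return True when both version strings share the same major number."""
    return major_version(chrome_version) == major_version(driver_version)
```

With the example version from above, `same_major("92.0.4515.107", "92.0.4515.43")` is true, while a driver whose version starts with 91 would be rejected.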

Save and Extract ChromeDriver:

Once the download is complete, navigate to your Downloads folder (or the folder where you saved the file). Locate the downloaded ChromeDriver zip file, and extract its contents into your `scraper` folder that you created earlier.

By completing these steps, you’ll have the ChromeDriver executable set up in your project directory, ready for seamless integration into your web scraping tasks.
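The extraction step can also be scripted. The sketch below unpacks a downloaded archive into the project folder and returns the driver executables it finds. The function name `extract_driver` is hypothetical, and the file names `chromedriver` and `chromedriver.exe` are assumptions about what the archive contains. Python's `zipfile` module does not restore Unix permission bits, so the sketch also marks the driver as executable.

```python
import stat
import zipfile
from pathlib import Path

# Assumed names of the driver binary inside the archive
DRIVER_NAMES = {"chromedriver", "chromedriver.exe"}


def extract_driver(zip_path: Path, target_dir: Path) -> list:
    """Extract zip_path into target_dir and return the driver files found."""
    target_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target_dir)
    drivers = sorted(
        path for path in target_dir.rglob("*")
        if path.is_file() and path.name in DRIVER_NAMES
    )
    for driver in drivers:
        # Add the owner-execute bit so macOS and Linux can run the binary
        driver.chmod(driver.stat().st_mode | stat.S_IXUSR)
    return drivers
```

The returned paths show where the executable ended up, which is useful because some archives place it inside a subfolder.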

Scrape Instagram User Accounts

To efficiently scrape Instagram user accounts, follow these detailed step-by-step instructions to set up and execute your Python script.

Prepare Your Python Script:

Copy and paste the following Python script into your `scraper.py` file located in the `scraper` folder. This script governs the scraping process:

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.chrome.service import Service
from twocaptcha import TwoCaptcha
import time
import csv
from datetime import datetime
from getpass import getpass


class InstagramScraper:
    def __init__(self, username, password, api_key):
        self.username = username
        self.password = password
        # The solver is created here but is not called elsewhere in this script
        self.solver = TwoCaptcha(api_key)

        # Chrome driver setup
        chrome_options = webdriver.ChromeOptions()
        chrome_options.add_argument('--no-sandbox')
        chrome_options.add_argument('--disable-dev-shm-usage')
        # Replace this path with the location of your own ChromeDriver executable
        service = Service('/Users/kwebby/Downloads/chromedriver_mac_arm64/chromedriver')
        self.driver = webdriver.Chrome(service=service, options=chrome_options)
        self.wait = WebDriverWait(self.driver, 10)

    def login(self):
        try:
            print("Logging in to Instagram...")
            self.driver.get('https://www.instagram.com/accounts/login/')
            time.sleep(5)

            username_input = self.wait.until(EC.presence_of_element_located((By.NAME, 'username')))
            username_input.send_keys(self.username)
            print("Username entered")

            password_input = self.driver.find_element(By.NAME, 'password')
            password_input.send_keys(self.password)
            print("Password entered")

            login_button = self.driver.find_element(By.CSS_SELECTOR, "button[type='submit']")
            login_button.click()
            print("Login button clicked")

            # Give Instagram time to finish the login before continuing
            time.sleep(10)
            return True
        except Exception as e:
            print(f"Login failed: {str(e)}")
            return False

    def scrape_hashtag(self, hashtag, max_posts=50):
        csv_filename = f'instagram_hashtag_{hashtag}_{datetime.now().strftime("%Y%m%d_%H%M%S")}.csv'
        try:
            print(f"Opening hashtag page: #{hashtag}")
            self.driver.get(f'https://www.instagram.com/explore/tags/{hashtag}/')
            time.sleep(5)

            with open(csv_filename, 'w', newline='', encoding='utf-8') as csvfile:
                writer = csv.writer(csvfile)
                writer.writerow(['Post URL', 'Profile URL', 'Username'])
                print(f"Created CSV file: {csv_filename}")

                posts_processed = 0
                processed_urls = set()

                while posts_processed < max_posts:
                    print(f"Currently processed {posts_processed} posts")
                    processed_before = posts_processed
                    post_links = self.driver.find_elements(By.CSS_SELECTOR, 'a[href*="/p/"]')

                    for link in post_links:
                        if posts_processed >= max_posts:
                            break
                        try:
                            post_url = link.get_attribute('href')
                            if post_url in processed_urls:
                                continue
                            print(f"\nProcessing post: {post_url}")
                            self.driver.execute_script("arguments[0].click();", link)
                            time.sleep(3)

                            # Wait for the post modal to appear
                            article = self.wait.until(EC.presence_of_element_located((By.TAG_NAME, 'article')))

                            try:
                                # Find the header element that contains the username link
                                header = article.find_element(By.CSS_SELECTOR, 'header')
                                # Get the profile link and username
                                profile_link = header.find_element(By.CSS_SELECTOR, 'a')
                                profile_url = profile_link.get_attribute('href')
                                username = profile_url.split('/')[-2]  # Extract username from profile URL
                                print(f"Found profile URL: {profile_url}")
                                print(f"Found username: {username}")

                                # Write to CSV
                                writer.writerow([post_url, profile_url, username])
                                csvfile.flush()
                                print(f"Saved to CSV: {post_url}, {profile_url}, {username}")
                            except Exception as e:
                                print(f"Error extracting profile info: {str(e)}")
                                writer.writerow([post_url, '', ''])
                                csvfile.flush()

                            posts_processed += 1
                            processed_urls.add(post_url)

                            # Close the post modal
                            close_button = self.driver.find_element(By.CSS_SELECTOR, 'svg[aria-label="Close"]')
                            close_button.click()
                            time.sleep(2)
                        except Exception as e:
                            print(f"Error processing post: {str(e)}")
                            try:
                                self.driver.find_element(By.CSS_SELECTOR, 'svg[aria-label="Close"]').click()
                            except Exception:
                                pass
                            continue

                    # Stop if a full pass over the page added nothing new,
                    # otherwise the loop would never end on a short hashtag page
                    if posts_processed == processed_before:
                        print("No new posts found; stopping early")
                        break

                    # Scroll to load more posts
                    self.driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
                    time.sleep(3)

                print(f"\nScraping completed. Total posts processed: {posts_processed}")
                print(f"Data saved to: {csv_filename}")
        except Exception as e:
            print(f"Error during scraping: {str(e)}")
        return csv_filename

    def close(self):
        self.driver.quit()


def main():
    scraper = None
    try:
        username = input("Enter your Instagram username: ")
        # getpass hides the characters while you type the password
        password = getpass("Enter your Instagram password: ")
        api_key = input("Enter your 2captcha API key: ")
        hashtag = input("Enter the hashtag to scrape (without #): ")
        max_posts = int(input("Enter maximum number of posts to scrape: "))

        scraper = InstagramScraper(username, password, api_key)
        if scraper.login():
            print("Successfully logged in to Instagram")
            csv_file = scraper.scrape_hashtag(hashtag, max_posts)
            print(f"Scraping completed. Results saved to: {csv_file}")
        else:
            print("Failed to login to Instagram")
    except Exception as e:
        print(f"An error occurred: {str(e)}")
    finally:
        # Always close the browser, even if an error occurred
        if scraper is not None:
            scraper.close()


if __name__ == "__main__":
    main()

Install Necessary Dependencies:

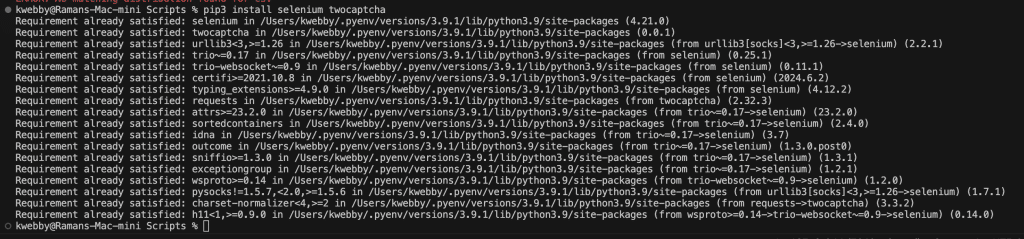

Open your terminal or command prompt and navigate to your `scraper` directory.

Install the required Python libraries by executing the command:

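`pip install selenium 2captcha-python`

If your system uses the `pip3` command for Python 3, run this instead:

`pip3 install selenium 2captcha-python`

Note: The `csv`, `time`, and `datetime` modules ship with Python and do not need to be installed. The 2Captcha client, which is published as `2captcha-python` and imported as `twocaptcha`, is mainly useful if you encounter bot tests when running on servers. The script imports it at the top, so either install the package or remove that import together with the `TwoCaptcha(api_key)` line.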
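To confirm that everything the script imports is available before running it, a short check along these lines may help. It is only a sketch: the function name `missing_modules` is made up for this example, and the list of names is taken from the import statements at the top of `scraper.py`.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# csv, time and datetime are part of the standard library;
# selenium and twocaptcha have to be installed with pip.
required = ["csv", "time", "datetime", "selenium", "twocaptcha"]
print("Missing modules:", missing_modules(required))
```

An empty list means all imports should succeed; any name that is printed still needs to be installed.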

Update ChromeDriver Path

In your `scraper.py` file, find the line that begins with `service = Service(`, where the ChromeDriver path is specified.

Right-click the ChromeDriver executable located in your `scraper` folder, select “Copy path,” and paste this path into the appropriate line in your script.
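Instead of pasting an absolute path, the location can also be derived from the project folder. The sketch below assumes the executable sits directly inside that folder and is named `chromedriver` (or `chromedriver.exe` on Windows); the helper name `driver_path` is hypothetical.

```python
import sys
from pathlib import Path


def driver_path(project_dir: Path, platform: str = sys.platform) -> Path:
    """Return the expected ChromeDriver location inside project_dir."""
    # sys.platform is 'win32' on Windows, 'darwin' on macOS and 'linux' on Linux
    filename = "chromedriver.exe" if platform.startswith("win") else "chromedriver"
    return project_dir / filename
```

Inside `scraper.py` this could be used as `Service(str(driver_path(Path(__file__).resolve().parent)))`, which keeps the script working if the `scraper` folder is moved.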

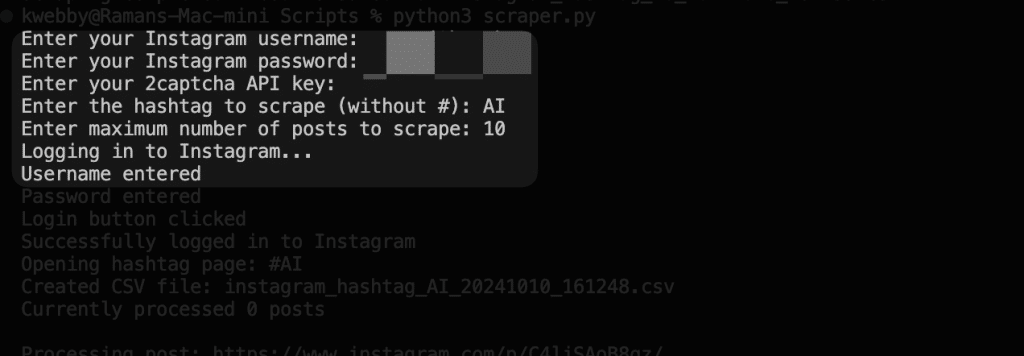

Execute the Script:

Once dependencies are installed and the path is updated, run the code by typing the following command in your terminal:

`python scraper.py`

Or, if your system uses the `python3` command:

`python3 scraper.py`

Provide User Input

When prompted, enter the required inputs as follows:

Instagram username: Enter your Instagram username.

Instagram password: Provide your Instagram password. The script keeps it in memory only for the duration of the run and does not write it to disk.

2captcha API key: (Optional) Enter your 2captcha API key if needed.

Hashtag to scrape: Input a hashtag (e.g., foryou, viral) without the `#` symbol.

Maximum number of posts to scrape: Specify how many posts you want to scrape, such as 10 or 20.
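The script passes the hashtag and post count through largely as typed, so a small amount of validation can prevent confusing errors. The following sketch shows one way to clean both values; the helper names and the `upper_limit` of 500 are assumptions made for this example, not limits imposed by Instagram or by the script.

```python
import re


def normalize_hashtag(raw: str) -> str:
    """Strip surrounding whitespace and any leading '#' from a hashtag."""
    tag = raw.strip().lstrip("#")
    if not tag or re.search(r"\s", tag):
        raise ValueError("hashtag must be a single non-empty word")
    return tag


def parse_max_posts(raw: str, upper_limit: int = 500) -> int:
    """Convert the typed post count to an int between 1 and upper_limit."""
    value = int(raw.strip())
    if not 1 <= value <= upper_limit:
        raise ValueError(f"post count must be between 1 and {upper_limit}")
    return value
```

In `main()`, the two `input(...)` results for the hashtag and the post count could be wrapped in these helpers before they are handed to `scrape_hashtag`.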

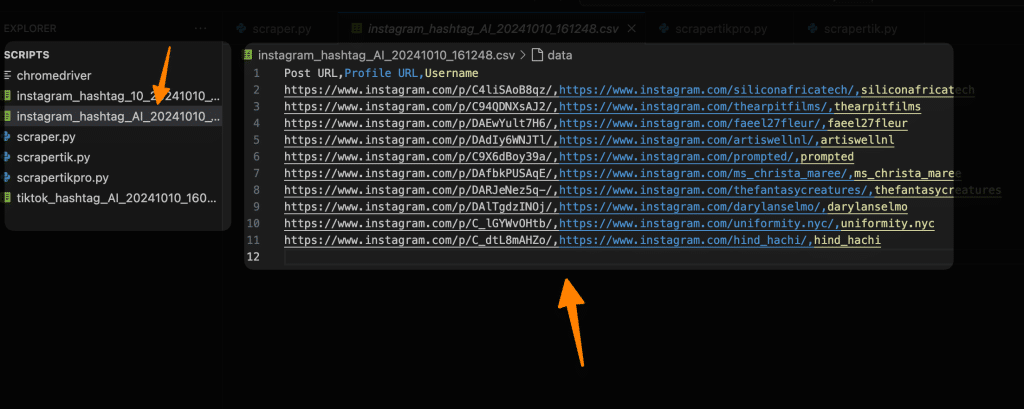

The Result Data

You will find the results in the same folder, in a `.csv` file whose name starts with `instagram_hashtag_`. Open it to see the collected details: Post URL, Profile URL, and Username.
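Once the file exists, it can be read back with Python's `csv` module. The sketch below counts how often each username appears, relying on the `Username` column header that the script writes; the function name `count_usernames` is made up for this illustration.

```python
import csv
from collections import Counter
from pathlib import Path


def count_usernames(csv_path: Path) -> Counter:
    """Count how often each non-empty username appears in a results file."""
    with open(csv_path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        # Rows where the profile could not be extracted have an empty username
        return Counter(row["Username"] for row in reader if row.get("Username"))
```

Calling `count_usernames(...)` with the path of a results file and then `.most_common(10)` on the result lists the accounts that posted most often under the hashtag.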

Final Thoughts!

In conclusion, setting up and executing a Python script to scrape Instagram user accounts can be both an exciting and rewarding endeavor.

By following the steps in this guide, you have installed Visual Studio Code and Python, set up ChromeDriver, installed the required dependencies, and run a script that collects post URLs, profile URLs, and usernames for a hashtag into a CSV file.

Remember, maintaining a secure environment by safely storing your credentials and responsibly using the scraping results will empower you to harness the full potential of Instagram data while adhering to privacy norms.

Whether for research, marketing, or personal interest, this setup opens a world of data-driven possibilities.