A well-structured robots.txt file guides search engine bots and makes their work smoother. It gives you a say in which crawlers visit your site and which parts of it they crawl.

If you want your site to rank near the top of search engine results, you have to make it easy for search engine bots to explore it efficiently. You can take control of how search engines crawl your site, and yes, it is possible!

This is one of the simplest files on a website. Set up correctly, it is a boon; set up incorrectly, it can wreak havoc on your SEO and keep search engines from accessing important content on your website.

The robots.txt file helps you keep crawling under control. Crawling is sometimes known as ‘spidering’, which may sound funny!

Robots.txt is a text file that resides in your site’s root directory and tells robots dispatched by search engines which pages to crawl and which to skip.

By now you have some idea of how powerful this tool is! Used correctly, it can improve how often your important pages are crawled, which can affect your SEO efforts.

So, how do you use this file? What should you avoid? How does it work? This blog answers those questions.

What is a robots.txt file?

When you create a new website, search engines send their bots to crawl it and build an index of what each page contains. That way, they know which pages to show when someone searches using related keywords.

The Robots Exclusion Protocol (REP) is a set of web standards that regulates how robots crawl the web, index content and serve that content to users. Robots.txt is part of it: the file is an implementation of this protocol that lays out the guidelines robots are expected to follow, including Googlebot.

Be careful when making changes to robots.txt, because this file has the potential to make big parts of your website inaccessible to search engines. A robots.txt file is only valid for the full domain it is served from, including the protocol (http or https).

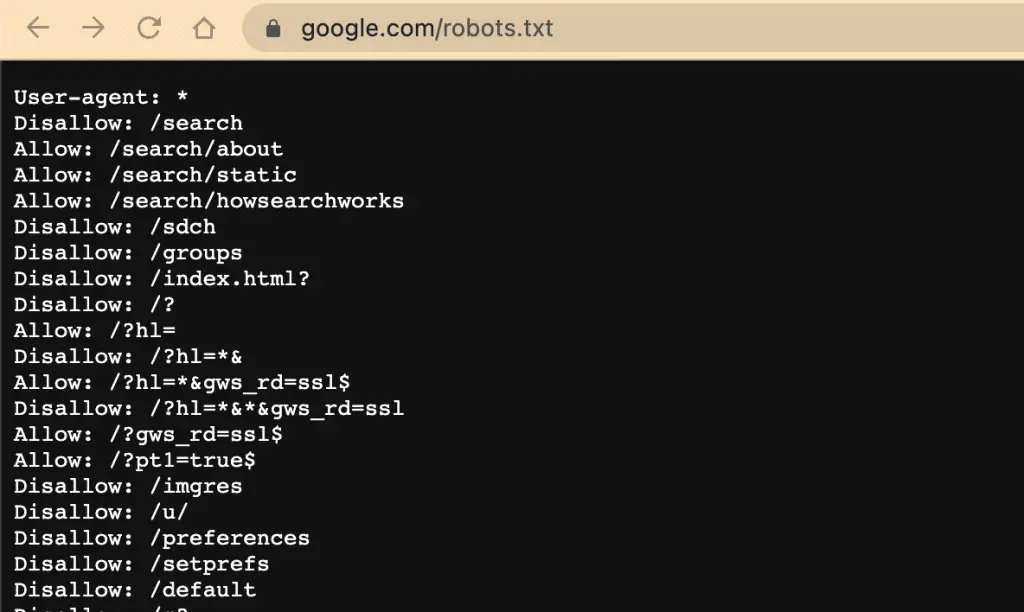

You can also take a quick peek at any website’s robots.txt file by typing the site’s URL and adding /robots.txt at the end. Simple.

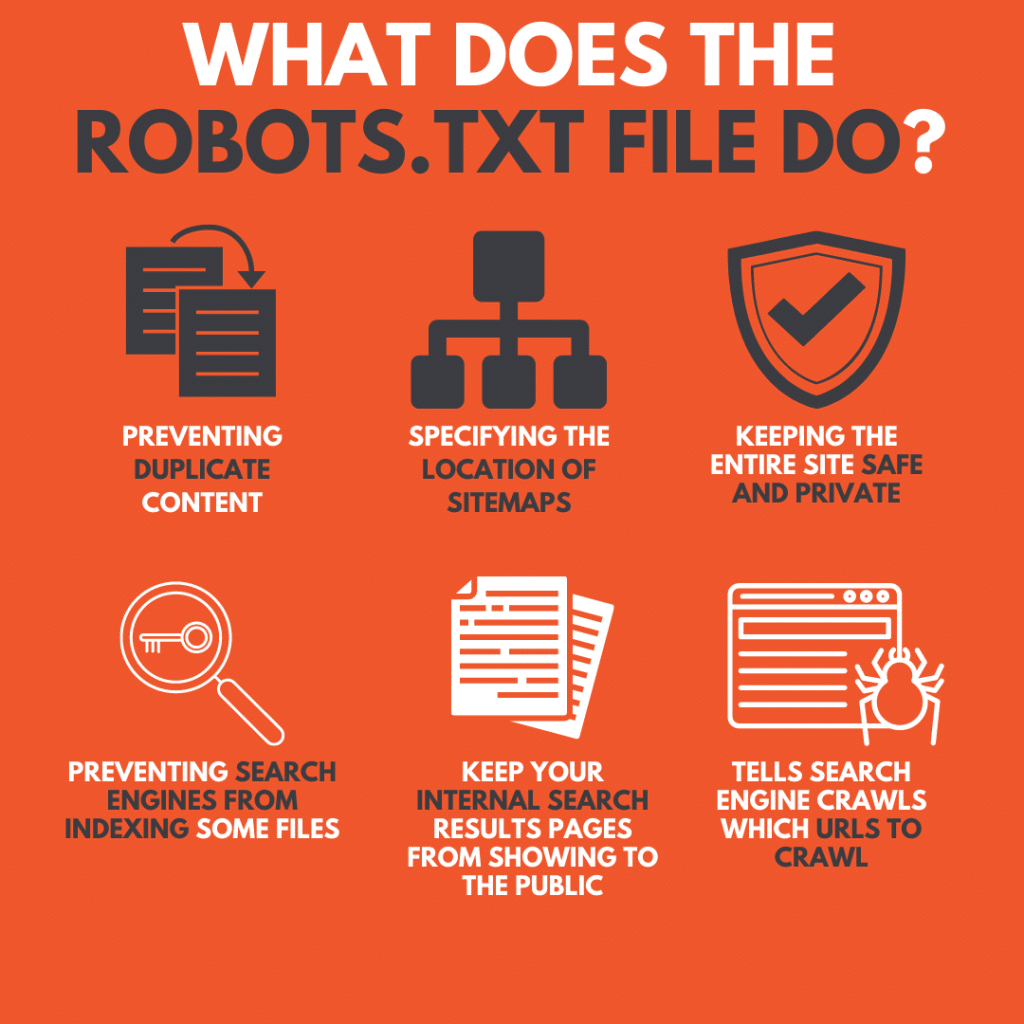
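The same trick can be sketched in a few lines of Python. This is only an illustration: the helper name `robots_txt_url` and the example.com URLs are assumptions made for the example, not part of any tool mentioned in this article.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the protocol and host (with port, if any);
    # robots.txt always lives at the root path of the site.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=7"))
```

Whatever page you start from, the path and query string are dropped and only the protocol and host survive, which matches the point above that a robots.txt file belongs to one full domain.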

What does the robots.txt file do?

The robots.txt file keeps crawlers out of areas of your site they do not need to access. Be careful, though: if you accidentally disallow Googlebot from crawling your whole site, the consequences for your search visibility can be severe.

Some of the uses are:

- Keeping duplicate content from appearing in SERPs (meta robots tags can help with this too)

- Specifying the location of sitemaps

- Keeping entire sections of a site private from crawlers (this is not a security measure)

- Preventing search engines from crawling some files on your site

- Keeping your internal search results pages from showing up in public search results

- Telling search engine crawlers which URLs on your site they can access

If there is no area of your site where you want to control user agents’ access, you may not need a robots.txt file at all.

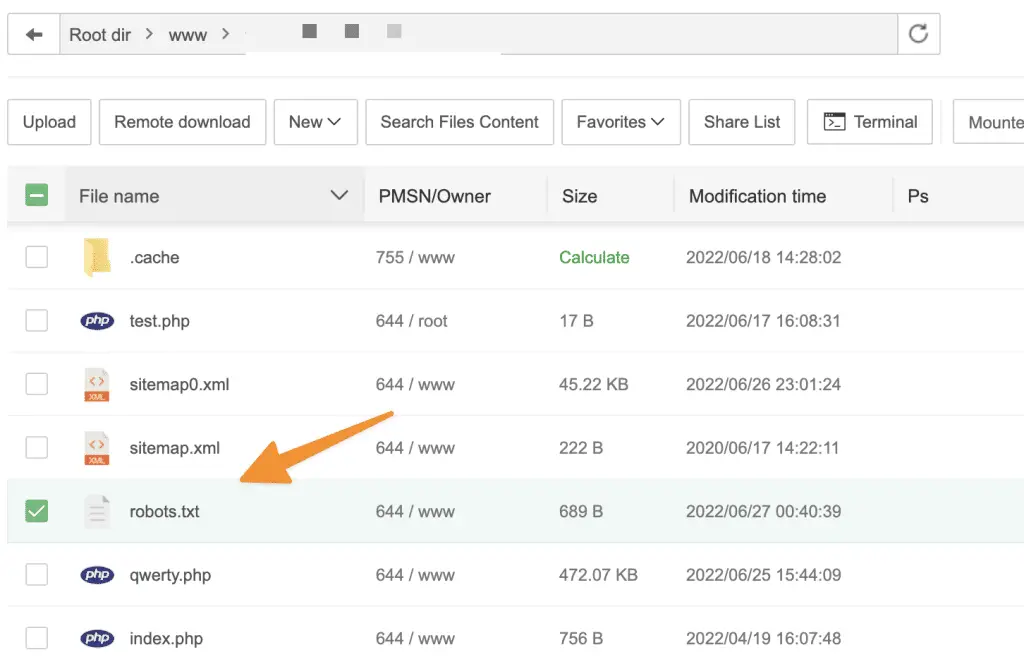

Where should I put or find my robots.txt file?

Robots.txt is a plain text file, so it is super easy to create. You can create it in any text editor, such as Notepad. Open a new document and save it as robots.txt.

Then log in to your cPanel and locate the public_html folder to access your site’s root directory. After that, drag the file into it.

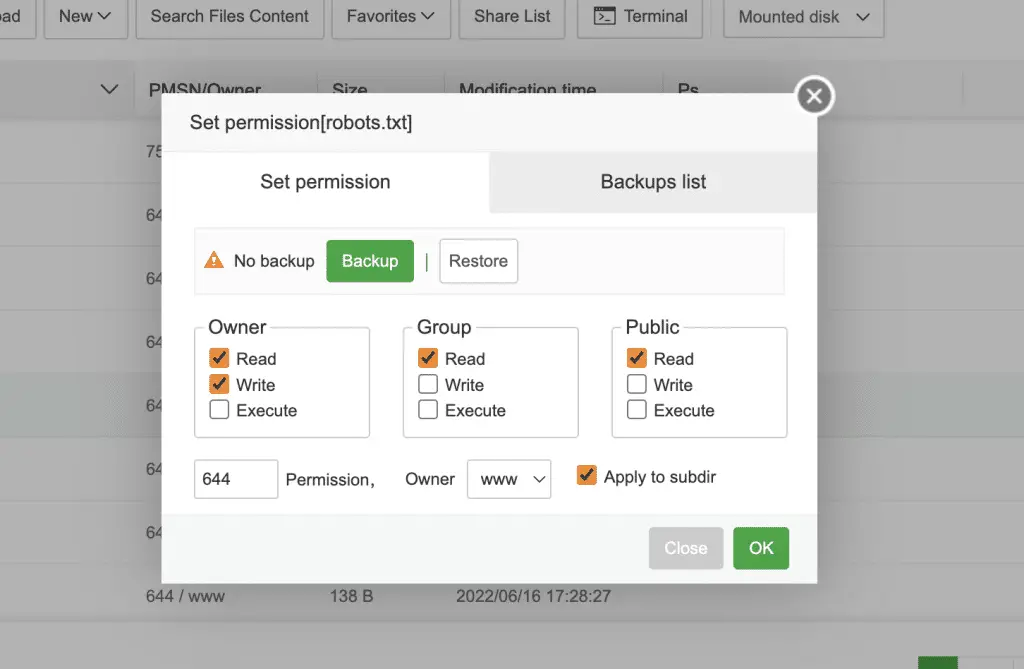

Then ensure that the file has the correct permissions. As the owner, you should be able to read and write the file, while everyone else should only be able to read it.

The file should display the permission code “644”. If it shows a different code, change it through the file permissions setting.
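If you have shell or script access instead of cPanel, the same permission can be set programmatically. The following is a minimal sketch that assumes a POSIX (Linux-style) server; the temporary directory is only a hypothetical stand-in for your real public_html folder.

```python
import os
import stat
import tempfile

# Hypothetical stand-in for the site's root directory (e.g. public_html).
site_root = tempfile.mkdtemp()
robots_path = os.path.join(site_root, "robots.txt")

with open(robots_path, "w", encoding="utf-8") as fh:
    fh.write("User-agent: *\nDisallow: /admin/\n")

# 0o644 = owner can read and write; group and others can only read.
os.chmod(robots_path, 0o644)

# Read the permission bits back to confirm the change.
mode = stat.S_IMODE(os.stat(robots_path).st_mode)
print(oct(mode))
```

The leading `0o` marks an octal number in Python, so `0o644` corresponds to the “644” code shown in a file manager.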

Now your robots.txt file is ready!

How to find your robots.txt file is another question. Open your site over FTP or in cPanel, and you’ll find the file in your website’s public_html directory.

Once you open the file you’ll be greeted by some message, which will look like this.

How do big sites use robots.txt?

Some popular sites use robots.txt to tell search engine crawlers what they should and should not do.

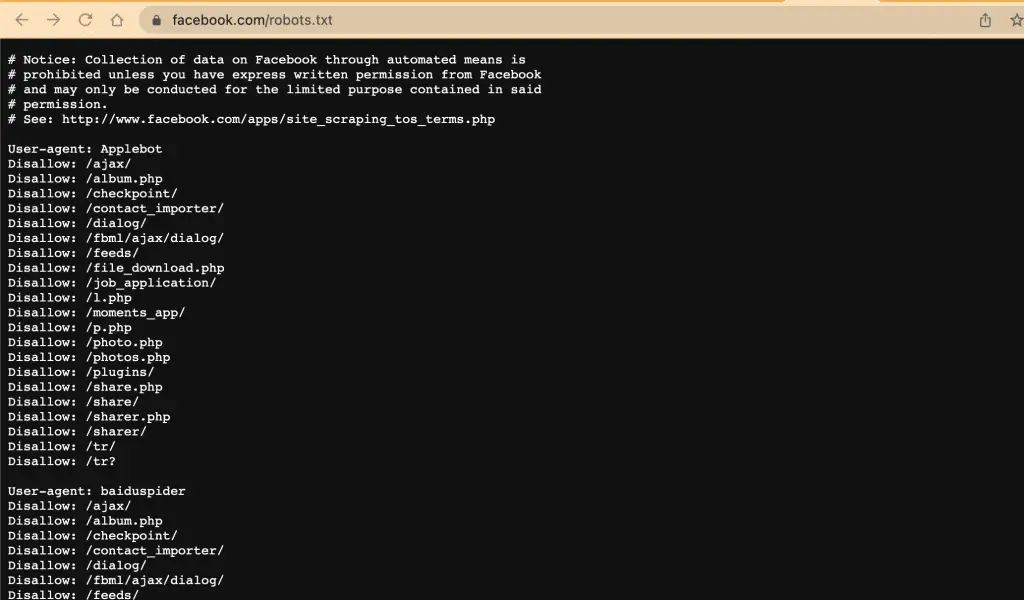

One example is Facebook.com. If you open their robots.txt file, you will see how they want different bots to navigate their website; for the most part they disallow various sections of the site, such as “share” and “plugins”.

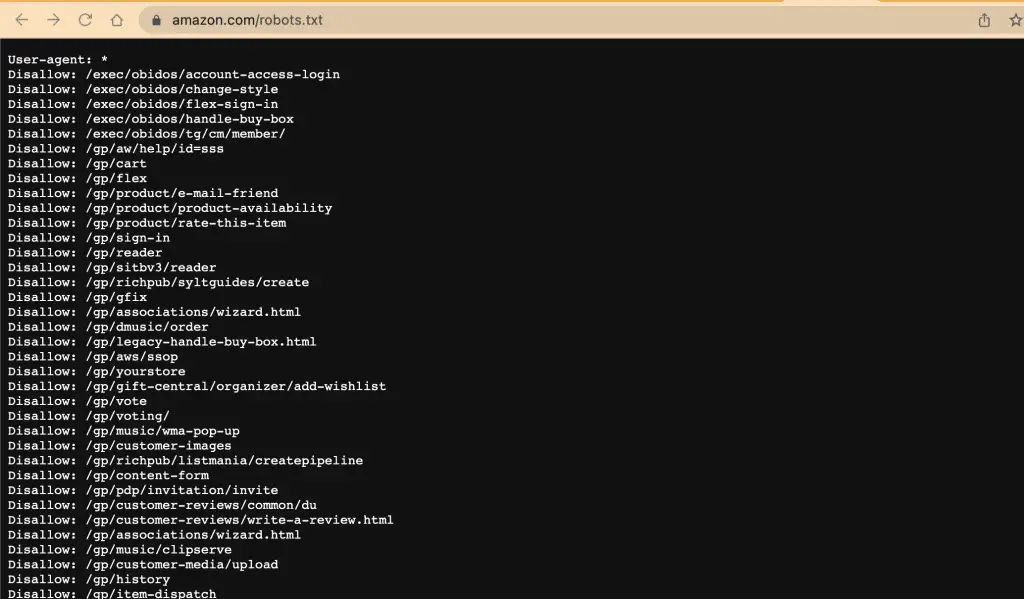

Another example is Amazon.com. If you open their robots.txt file, you will see a different pattern from Facebook’s: they use the wildcard * to address all bots at once and tell all of them not to crawl the directories listed in the file.

Pros and cons of using robots.txt

There are some pros and cons of using the robots.txt file. Pros are:

- It helps to prevent duplicate content.

- If you are reworking your website, you can use the robots.txt file to keep unfinished pages from being crawled.

- You can tell search engines where your sitemap is located.

- It helps to manage which pages are crawled and which are ignored by search engines.

- You can block any non-SEO pages and add your sitemap URL.

Cons are:

- It may not be supported by all search engines.

- It can reveal the location of your site’s directories and private data to an attacker.

- If your settings are incorrect, search engines may drop your pages from their index.

Robots.txt syntax

A robots.txt file is made up of groups of directives, and each group begins with a user-agent line.

There are various search engines, and different search engines interpret directives differently.

In the original standard the first matching directive wins, but Google and Bing apply the most specific (longest) matching rule instead.

You can think of robots.txt syntax as the language of the robots.txt file. There are five common terms you may come across: user-agent, allow, disallow, crawl-delay and sitemap.

The sitemap directive tells search engines where to find an XML sitemap.

The user-agents

Each search engine identifies itself with a user agent: Google identifies as Googlebot, Yahoo as Slurp, Bing as Bingbot, and the list goes on. The user agent names the specific crawler bot you are instructing, which is usually a search engine’s crawler.

Any program on the internet has a ‘user agent’, or assigned name. For human users, this contains information such as the operating system version and browser type; it does not contain personal information.

The first line of each block is the user-agent line. It pinpoints specific bots, identifying which crawler the rules that follow apply to.

The most common user agents for search engine spiders

There are thousands of web crawlers and user agents wandering the internet, but these are some of the most common ones:

- Googlebot: one of the most popular crawlers, used to gather the web page information that feeds Google’s SERPs.

- Bingbot: created by Microsoft in 2010 to supply information to their Bing search engine.

- Slurp Bot: Yahoo’s web crawler, which collects content from partner sites for Yahoo search results.

- DuckDuckBot: the web crawler for DuckDuckGo, a search engine known for privacy.

- Baiduspider: the crawler of the Chinese search engine Baidu, which crawls web pages and updates the Baidu index.

- Yandex Bot: the crawler of Yandex, the largest Russian search engine; it can appear under many different user-agent strings.

- Sogou Spider: the crawler of Sogou, a leading Chinese search engine launched in 2004.

The disallow directive

The Disallow directive usually appears on the second line of a block of directives. You use it to specify URLs that the named user agents are not allowed to crawl.

Here’s an example of a robots.txt file using the disallow directive:

User-agent: *

Disallow: /cgi-bin/

Disallow: /admin/

Disallow: /private-directory/

This means these particular areas are off limits to bots. In this way you can tell search engines not to access certain areas, files or pages.
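To see how a crawler might read these rules, here is a small sketch using Python’s standard `urllib.robotparser` module. The bot name `ExampleBot` and the example.com URLs are made up for illustration; real crawlers use their own parsers, so treat this as an approximation.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /admin/
Disallow: /private-directory/
"""

parser = RobotFileParser()
# parse() takes the file's lines, so no network request is needed here.
parser.parse(rules.splitlines())

# "ExampleBot" is a hypothetical crawler name; it falls under the * group.
can_fetch_admin = parser.can_fetch("ExampleBot", "https://www.example.com/admin/settings")
can_fetch_blog = parser.can_fetch("ExampleBot", "https://www.example.com/blog/")

print(can_fetch_admin)  # False: /admin/ is disallowed
print(can_fetch_blog)   # True: no rule matches /blog/
```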

The allow directive

Some leading crawlers support an Allow directive, which can counteract a Disallow directive. You use it to specify URLs that user agents are allowed to crawl, even inside a disallowed directory. Not every crawler supports it.

Here’s an example of a robots.txt file using the allow directive:

User-agent: *

Disallow: /cgi-bin/

Allow: /cgi-bin/forum/

It is used to counteract a disallowed directory, and it is supported by Google and Bing. Like Google, Bing applies whichever allow or disallow directive is more specific, based on the length of the matching path.
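The “most specific rule wins” idea can be sketched as follows. This is a simplified illustration that handles plain path prefixes only (no wildcards); the function name `is_allowed` is an assumption for the example, and it is not the actual code any search engine runs.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Apply longest-match precedence to plain prefix rules (no wildcards).

    rules is a list of (directive, path_prefix) pairs,
    e.g. ("disallow", "/cgi-bin/").
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # A longer prefix wins; on a tie, prefer the less restrictive Allow.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [("disallow", "/cgi-bin/"), ("allow", "/cgi-bin/forum/")]
print(is_allowed("/cgi-bin/forum/topic1", rules))  # True
print(is_allowed("/cgi-bin/script.pl", rules))     # False
print(is_allowed("/blog/", rules))                 # True
```

Because `/cgi-bin/forum/` is longer than `/cgi-bin/`, the Allow rule takes precedence for forum URLs while the rest of the directory stays blocked.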

The noindex directive

The noindex directive is not part of the robots.txt standard, although webmasters have used it widely. Google stopped honoring noindex rules in robots.txt in September 2019. The reliable way to keep a page out of the index is the noindex robots meta tag, placed in the HTML head of the page.

Here’s an example of how the unofficial noindex rule has been written in a robots.txt file:

User-agent: *

Disallow: /cgi-bin/

Noindex: /private.html

The nofollow directive

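Nofollow is not part of the robots.txt standard either. The robots meta tag has a value called “nofollow” that can be used to instruct some search engines that a hyperlink should not influence the link target’s search engine ranking.

Here’s an example of how an unofficial nofollow rule has been written in a robots.txt file, although major search engines should not be expected to honor it: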

User-agent: *

Disallow: /cgi-bin/

Nofollow: /private.html

The sitemap directive

The Sitemap directive is not part of the original robots.txt standard, but it is supported by all major search engines. It lets webmasters include a sitemap location in the robots.txt file.

Here’s an example of a robots.txt file using the sitemap directive:

User-agent: *

Disallow: /cgi-bin/

Sitemap: http://www.example.com/sitemap.xml
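As a rough illustration of how a program can pick up the sitemap location, Python’s standard `urllib.robotparser` exposes a `site_maps()` method (available in Python 3.8 and later). The sketch below feeds it the example above directly, without fetching anything from the network.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /cgi-bin/",
    "Sitemap: http://www.example.com/sitemap.xml",
])

# site_maps() returns a list of sitemap URLs, or None if none are declared.
sitemaps = parser.site_maps()
print(sitemaps)
```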

The host directive

The Host directive is not part of the robots.txt standard, and support for it is limited: it has historically been associated with Yandex, while Google and Bing do not act on it. It was intended to let webmasters specify the preferred domain name for a site that has multiple domain names.

Here’s an example of a robots.txt file using the host directive:

User-agent: *

Disallow: /cgi-bin/

Host: www.example.com

The crawl-delay directive

The crawl-delay directive tells a crawler how many seconds to wait between requests to your site. Yahoo, Yandex and Bing respond to crawl-delay directives.

Here’s an example of a robots.txt file using the crawl-delay directive:

User-agent: *

Disallow: /cgi-bin/

Crawl-delay: 10

This means the search engine waits 10 seconds before crawling your site, or 10 seconds before re-accessing it. The effect is much the same, with slight differences depending on the search engine.

Googlebot does not respond to this directive; its crawl rate has been managed through Google Search Console instead. Google’s advice is to avoid relying on crawl-delay directives.
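For crawlers that do respect the directive, reading the value is straightforward. Here is a minimal sketch with Python’s standard `urllib.robotparser`; the crawler name `ExampleBot` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /cgi-bin/",
    "Crawl-delay: 10",
])

# crawl_delay() returns the delay in seconds for the given user agent,
# or None when the file sets no delay for it.
delay = parser.crawl_delay("ExampleBot")
print(delay)
```

A polite crawler would then pause for that many seconds between requests, for example with `time.sleep(delay)`.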

How to use wildcard/ regular expressions

So now we understand what a robots.txt file is, where to put it and which user agents it addresses. But you might still have a question like: “I have an eCommerce website and I would like to disallow all the pages that contain question marks or other unwanted characters in their URLs.”

This is where wildcards come to help. There are two things to consider. First, you don’t need to append a wildcard to every string in your robots.txt file. Second, there are two types of wildcards:

*wildcard: The asterisk matches any sequence of characters. This type of wildcard is great for URLs that share the same pattern.

Example of using the asterisk wildcard:

User-agent: *

Disallow: /*?

The robots.txt above disallows every page whose URL contains a question mark. So, if your website has a lot of pages with question marks in their URLs, this robots.txt will work well for you. But what if your website has some pages with question marks and some without?

$wildcard: The dollar sign marks the end of a URL. If you want to stop bots from accessing the PDFs on your site, this wildcard comes in handy.

Example of using the dollar sign wildcard:

User-agent: *

Disallow: /*.pdf$

This robots.txt stops bots from accessing all the PDFs on your website. So, this is how you can use robots.txt to control what search engine bots can and cannot access on your site. Robots.txt is a helpful tool for improving your website’s SEO.

Wildcards are not just used for defining user agents; they can also be used to match URLs. Wildcards are supported by Google, Bing, Yahoo and Ask. Remember, it’s always a good idea to double-check your robots.txt wildcards before publishing them.
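One way to double-check a wildcard rule before publishing is to translate it into a regular expression and try it against sample paths. The sketch below is only an approximation of how crawlers match patterns; the helper names `pattern_to_regex` and `matches` and the sample paths are assumptions made for this example.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece, then join the pieces with "any characters".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    # re.match anchors at the start, just as rules match from the start of the path.
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*?", "/shop/item?color=red"))             # True
print(matches("/*?", "/shop/item"))                       # False
print(matches("/*.pdf$", "/docs/guide.pdf"))              # True
print(matches("/*.pdf$", "/docs/guide.pdf?download=1"))   # False
```

The last line shows why the dollar sign matters: with it, only URLs that actually end in .pdf are matched.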

Validate your robots.txt

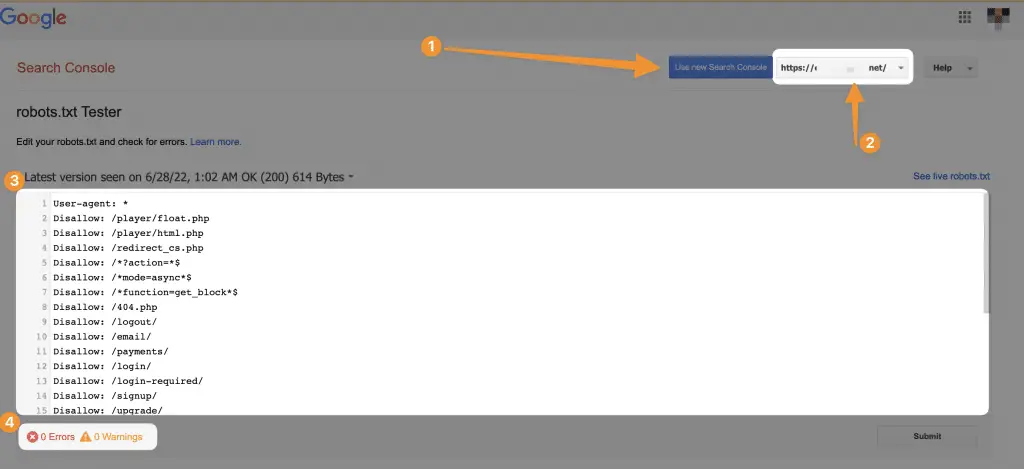

If you are using robots.txt, test it in the robots.txt tester to see whether it is blocking Google’s web crawlers from any URLs on your site.

You can also use the robots.txt tester tool to check whether the Googlebot-Image crawler can crawl a file you want to block from Google Image Search.

You can create the file with any program that produces a text file, or you can use the option available in Google Webmaster tools. Once you have created your robots.txt file, add it to the top-level directory of your server. After that, make sure the permissions let visitors read it at any time.

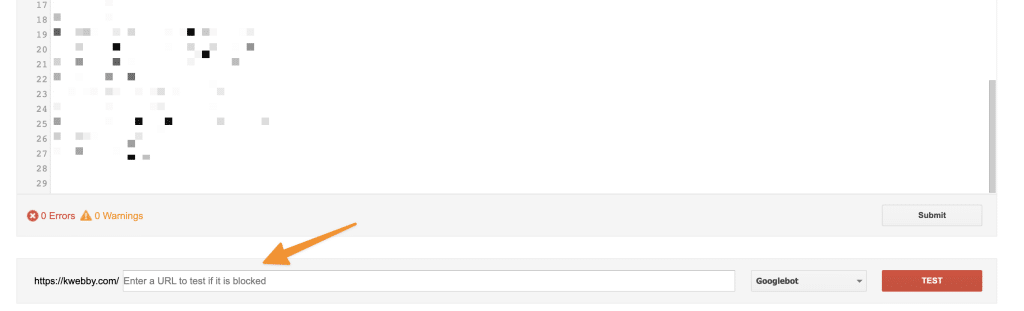

You can also test whether a URL is blocked for Googlebot by using the form just below the tool.

Final code

The robots.txt file is important for all websites, especially eCommerce sites that have a lot of products and categories. If you don’t have a robots.txt file, or if it’s not correctly configured, search engines might crawl and index all your pages, which can result in lower rankings because of duplicate content.

A robots.txt file should be placed in the root directory of your website. It is a text file and must be named “robots.txt”.

Here’s an example of a robots.txt file:

User-agent: *

Disallow: /cgi-bin/

Disallow: /tmp/

Disallow: /~joe/

Allow: /~joe/public_html/

Sitemap: http://www.example.com/sitemap.xml

The above Robots.txt file will block all bots from the /cgi-bin/, /tmp/ and /~joe/ directories, and it will allow the bot to crawl the pages in the /~joe/public_html/ directory. It will also tell the bots where to find your sitemap.

If you want to learn more about Robots.txt or if you need help creating a Robots.txt file for your website, we recommend that you contact a professional SEO company.

Generate robots.txt file using our tool Kwebby Robot TXT Generator

You can generate your robots.txt file using our very own Robots.txt File Generator tool.

Here are the options you need to consider:

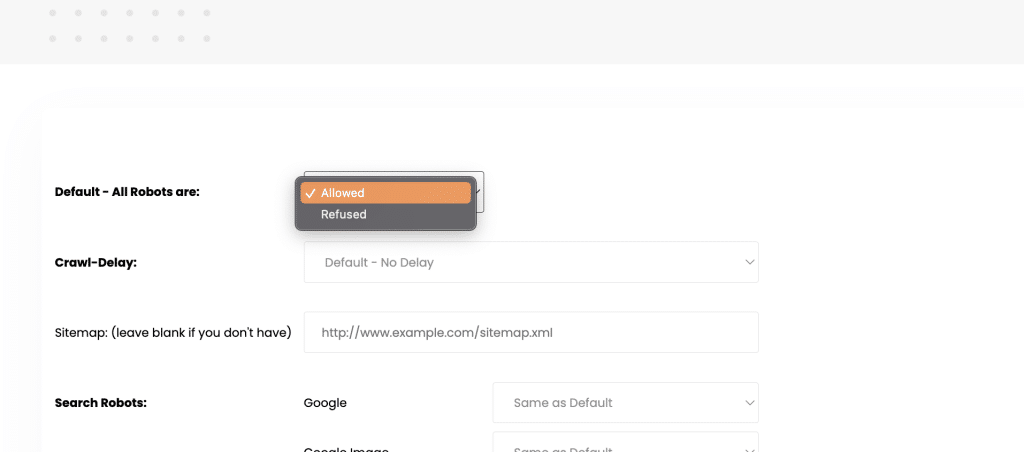

Option 1: All robots are either allowed or refused by default. If you have a private app that doesn’t have information available to the public, you can select “Refused”; otherwise, allow it.

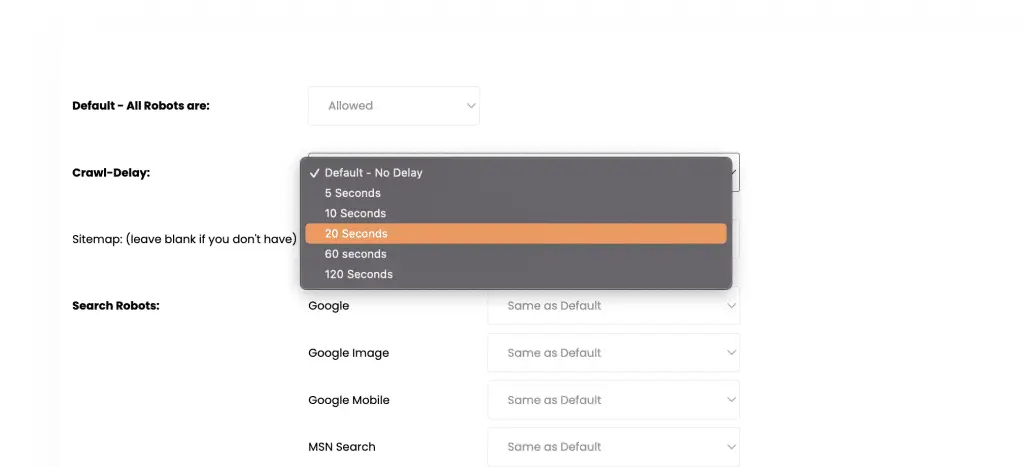

Option 2: The Crawl Delay option delays bots by a set interval to reduce server load. If your site is big and gets lots of traffic, you could set it to 10 seconds; otherwise there is no point in delaying crawlers on a starter or medium-sized website.

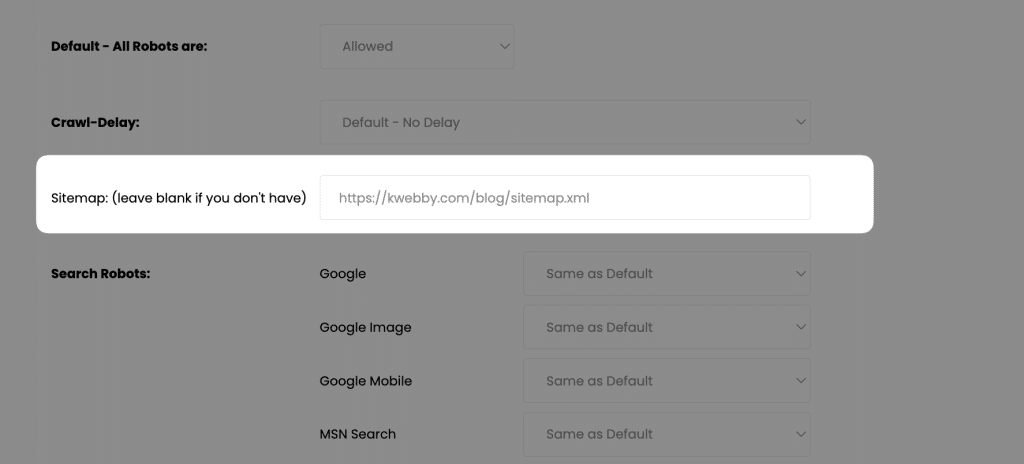

Option 3: This option lets you include the sitemap URL of your website so that robots know where your sitemap is and can focus on the URLs listed in the sitemap itself.

Generally, your sitemap file sits in the root folder, i.e. example.com/sitemap.xml or example.com/sitemap_xml. Copy that URL and paste it into the option.

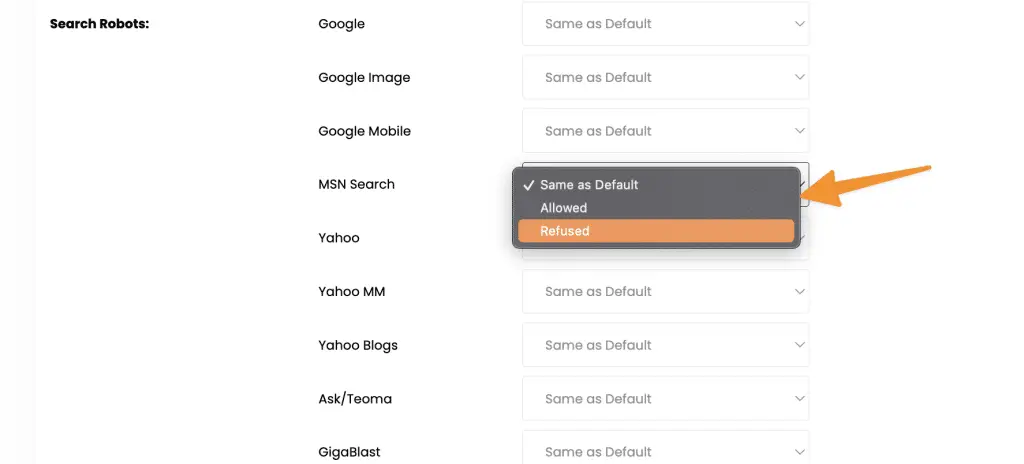

Option 4: In this section, you can allow or refuse individual search robots that you don’t want crawling your website. Just look through the list of bots and select either “allow” or “refused” for each one.

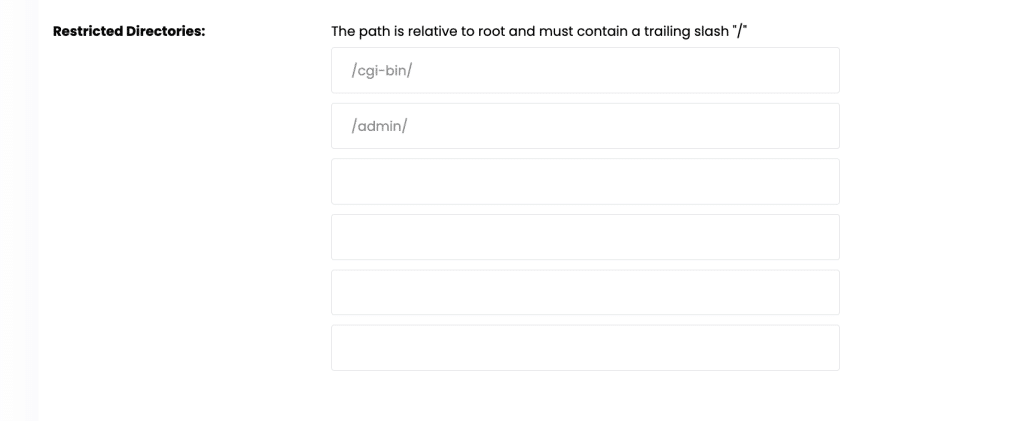

Option 5: Restricted directories let you disallow some directories which you don’t want bots to crawl, which is generally files URLs or private administrative directories i.e. “/admin”, “/login” etc.

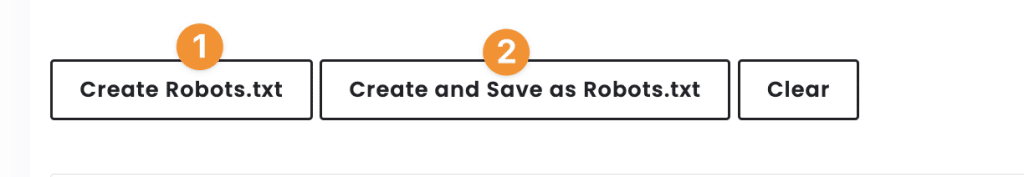

Now, you have an option to create or save the robots.txt file and paste it to your root folder.
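To make the five options more concrete, here is a rough sketch of how a generator could assemble the file from them. This is not the code behind our tool; the function name `build_robots_txt`, its parameters and the bot name `ExampleBadBot` are all hypothetical and chosen only for illustration.

```python
def build_robots_txt(allow_all=True, crawl_delay=None, sitemap=None,
                     refused_bots=(), restricted_dirs=()):
    """Assemble robots.txt text from options like the ones described above."""
    lines = ["User-agent: *"]
    if not allow_all:
        lines.append("Disallow: /")  # refuse every robot by default
    else:
        for directory in restricted_dirs:
            lines.append(f"Disallow: {directory}")
        if not restricted_dirs:
            lines.append("Disallow:")  # empty value = nothing is blocked
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")
    # Each refused bot gets its own group that blocks the whole site.
    for bot in refused_bots:
        lines += ["", f"User-agent: {bot}", "Disallow: /"]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


text = build_robots_txt(
    crawl_delay=10,
    sitemap="https://example.com/sitemap.xml",
    refused_bots=["ExampleBadBot"],
    restricted_dirs=["/admin/", "/login/"],
)
print(text)
```

The printed text can be saved as robots.txt and uploaded to the root folder like any hand-written file.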

Why is robots.txt important for SEO?

The robots.txt file plays a vital role in SEO. It tells search engine crawlers which URLs on your site they can access, which is mainly used to avoid overloading your site with requests. If you want to keep a page out of Google, block indexing with noindex or password-protect the page.

A big part of SEO is sending the right signals at the right time to search engines, and robots.txt is one of the best ways to communicate your crawling preferences to them.

For the best SEO results, make sure you are not blocking any content that you want crawled. Search engines cache the robots.txt content but usually refresh it about once a day. If you want this process to go faster, you can submit your robots.txt URL to Google.

Do not use robots.txt to protect private data: the file is public, so others can see exactly what you are trying to hide, and blocked pages may still get indexed. Use password protection for that data instead. The robots.txt filename is case sensitive, so the file must be named in lower case: robots.txt, not ROBOTS.TXT.

Other tips for robots.txt

We have talked about each function of robots.txt in detail. Now let’s go a little deeper and understand how each one may turn into an SEO disaster if not used properly.

You mustn’t use robots.txt or a noindex tag to block good content that you want to present publicly and have crawled. Getting this wrong can hurt your SEO. And as we said previously, don’t overuse crawl-delay, as it limits how many of your pages bots crawl.

That can be fine for some websites, but on a huge website you risk trouble by losing solid traffic. As mentioned earlier, the robots.txt filename is case sensitive: you have to call it ‘robots.txt’ in lower case, otherwise it won’t work!

Frequently Asked Questions (FAQs)

Why Is the Robots.txt file shown as a Soft 404 Error in Google Search Console?

This question was asked in the August 2024 edition of Google’s SEO Office Hours. A user noticed that their robots.txt URL was shown as a soft 404 error in Google Search Console.

John Mueller answered:

This one’s easy. That’s fine. You don’t need to do anything. The robots.txt file generally doesn’t need to be indexed. It’s fine to have it be seen as a soft 404.

Therefore, if you see this error, don’t worry. It’s fine for robots.txt to be reported as a soft 404.

Can I Hide My Admin Login Page Using Robots.txt file from Search Crawlers?

You can use robots.txt to ask search crawlers not to crawl your login page. Keep in mind that this only discourages well-behaved crawlers; it does not secure the page.

For example, if your admin login page is located at “www.example.com/admin/login”, you can add the following line under the relevant User-agent line in your robots.txt file:

Disallow: /admin/login

This will tell search engine crawlers not to access your admin login page.

Conclusion

So that is all you need to understand. To increase your site’s exposure, you have to ensure that search engines are crawling your most relevant content. Here, we have seen how a well-structured robots.txt file lets you direct how bots interact with your site. This is the ultimate guide to robots.txt, and we hope you have mastered it now!
